Yann Neuhaus

Manage Feathers.js authentication in Swagger UI

In addition to my previous articles Add a UI to explore the Feathers.js API and Add authentication in a Feathers.js REST API, today, I will explain how to manage Feathers.js authentication in Swagger UI and make it simple for our users.

After enabling authentication on API methods, as a result, using Swagger UI for testing is difficult, if not impossible. To solve this problem, Swagger needs to support authentication.

In this article, I will reuse the code from my previous articles.

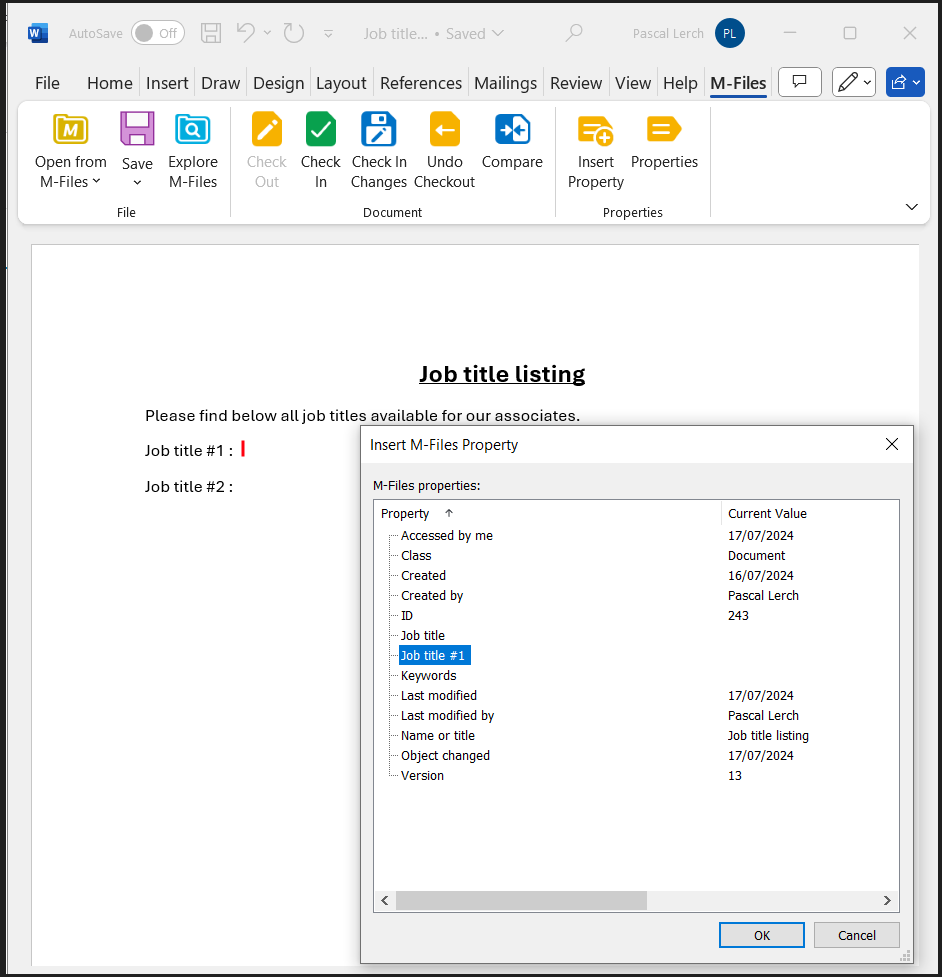

First step: add authentication specifications to the applicationFor Swagger UI to handle API authentication, I need to update the specifications in the app.ts file. Therefore, in the specs object, I add the components and security parts:

app.configure(

swagger({

specs: {

info: {

title: 'Workshop API ',

description: 'Workshop API rest services',

version: '1.0.0'

},

components: {

securitySchemes: {

BearerAuth: {

type: 'http',

scheme: 'bearer',

},

},

},

security: [{ BearerAuth: [] }],

},

ui: swagger.swaggerUI({

docsPath: '/docs',

})

})

)This new configuration, general to the API, tells Swagger that authenticated methods will use a Bearer token.

Second step: define documentation for authentication methodsThe authentication service generated by the CLI does not contain any specifications for Swagger UI, which results in an error message being displayed on the interface. So I’m adding the specifications needed by Swagger to manage authentication methods:

export const authentication = (app: Application) => {

const authentication = new AuthenticationService(app)

authentication.register('jwt', new JWTStrategy())

authentication.register('local', new LocalStrategy())

// Swagger definition.

authentication.docs = {

idNames: {

remove: 'accessToken',

},

idType: 'string',

securities: ['remove', 'removeMulti'],

multi: ['remove'],

schemas: {

authRequest: {

type: 'object',

properties: {

strategy: { type: 'string' },

email: { type: 'string' },

password: { type: 'string' },

},

},

authResult: {

type: 'object',

properties: {

accessToken: { type: 'string' },

authentication: {

type: 'object',

properties: {

strategy: { type: 'string' },

},

},

payload: {

type: 'object',

properties: {},

},

user: { $ref: '#/components/schemas/User' },

},

},

},

refs: {

createRequest: 'authRequest',

createResponse: 'authResult',

removeResponse: 'authResult',

removeMultiResponse: 'authResult',

},

operations: {

remove: {

description: 'Logout the current user',

'parameters[0].description': 'accessToken of the current user',

},

removeMulti: {

description: 'Logout the current user',

parameters: [],

},

},

};

app.use('authentication', authentication)

}The authentication.docs block defines how Swagger UI can interact with the authentication service.

Third step: add the security config into the serviceAt the beginning of my service file (workshop.ts), in the docs definition, I add the list of methods that must be authenticated in the securities array:

docs: createSwaggerServiceOptions({

schemas: {

workshopSchema,

workshopDataSchema,

workshopPatchSchema,

workshopQuerySchema

},

docs: {

// any options for service.docs can be added here

description: 'Workshop service',

securities: ['find', 'get', 'create', 'update', 'patch', 'remove'],

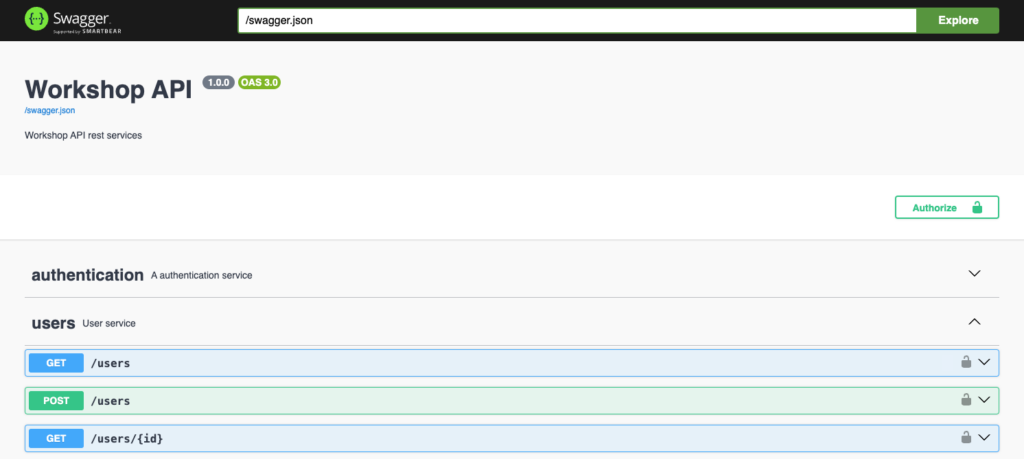

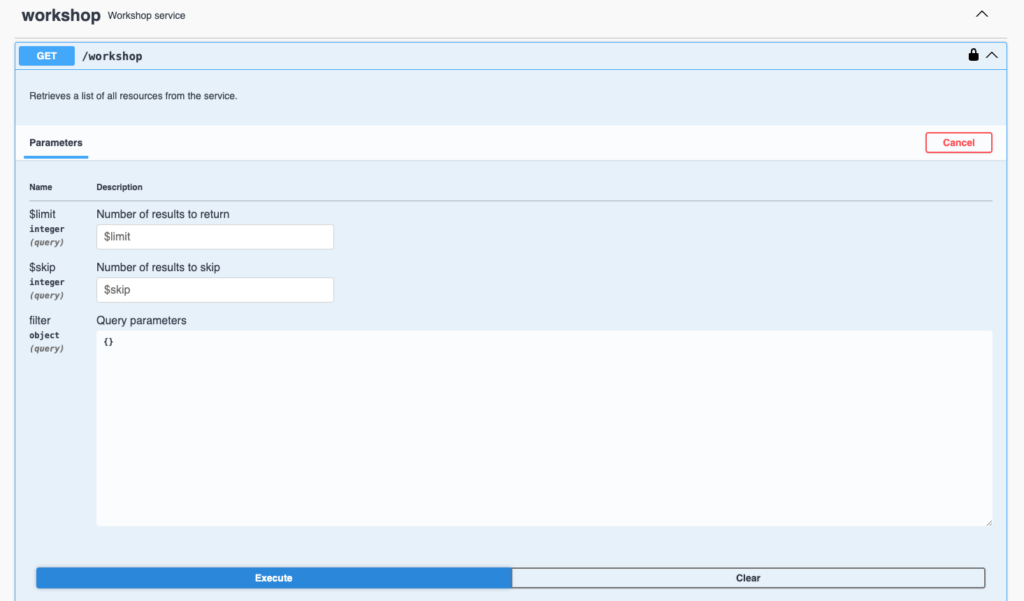

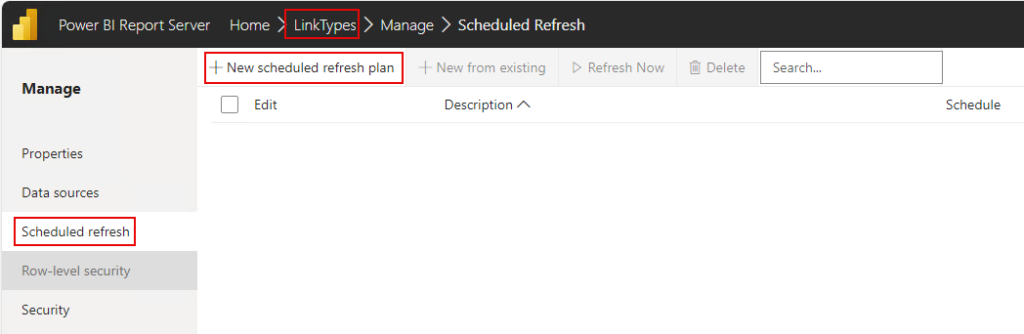

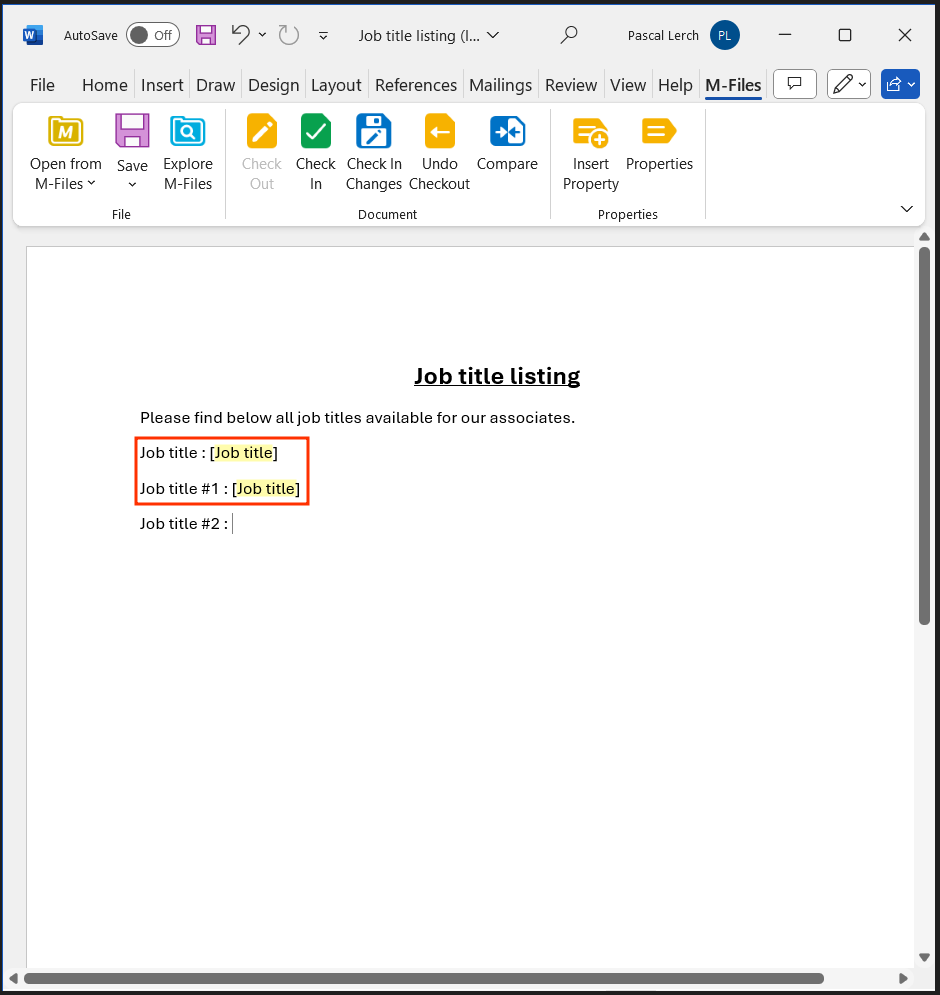

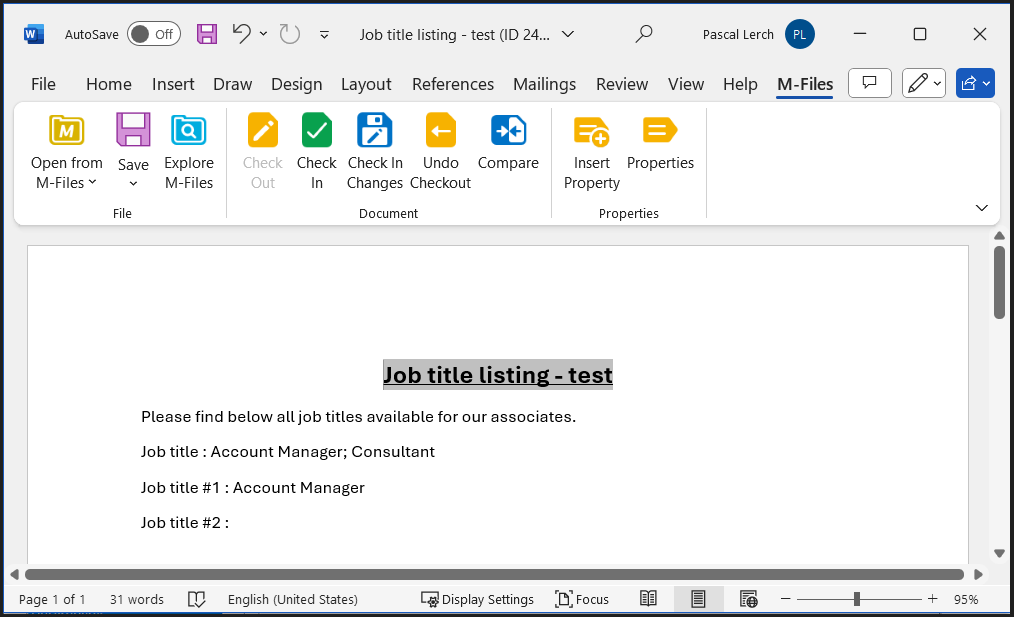

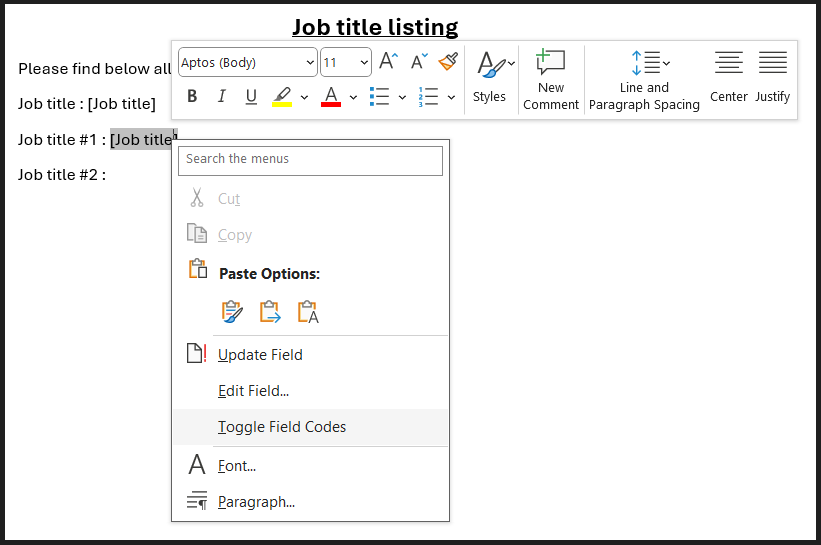

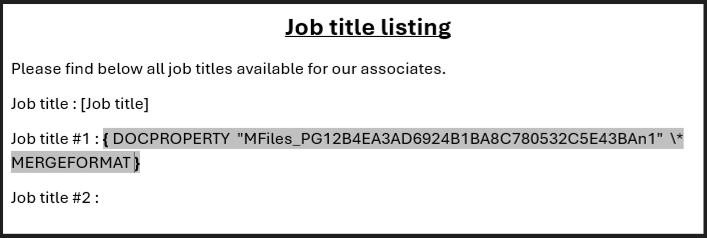

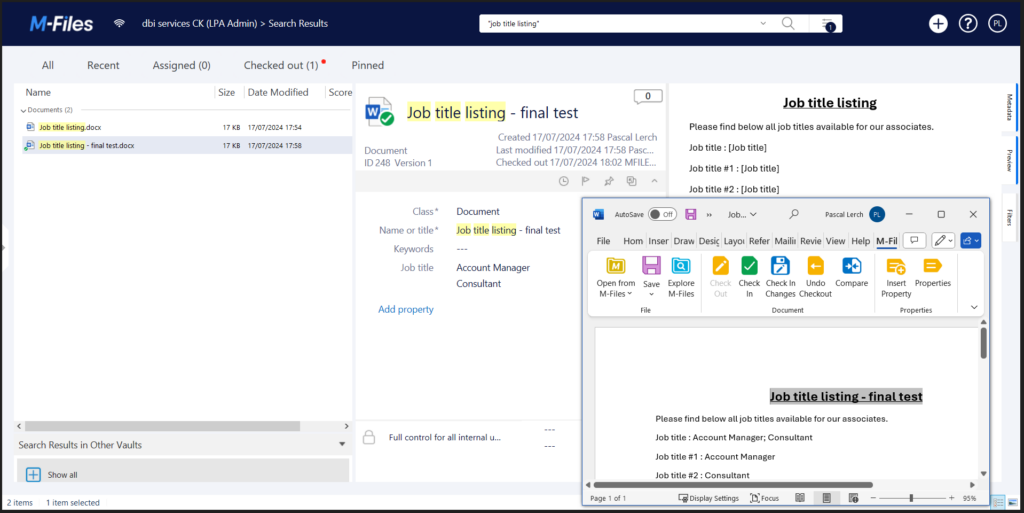

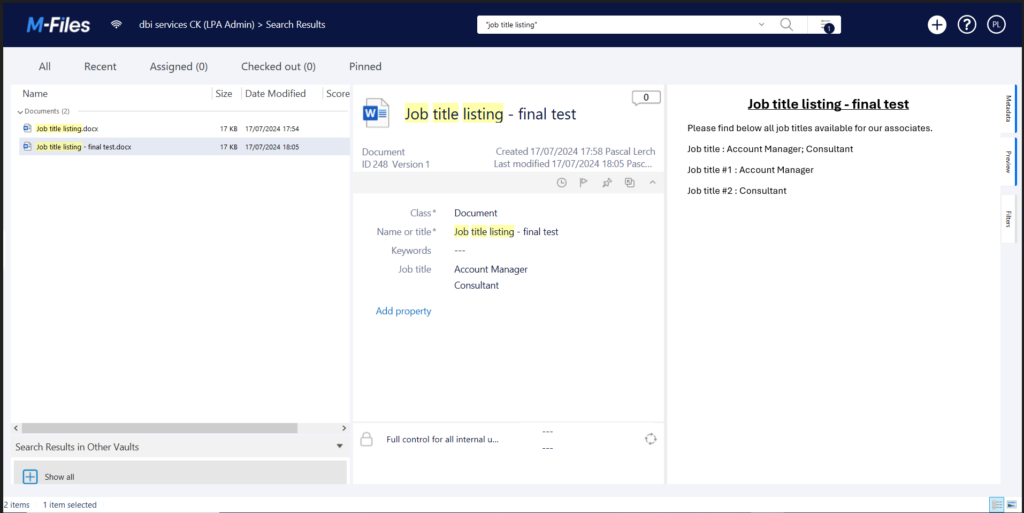

}As a result of the configuration, new elements appear on the interface:

- The Authorize button at the top of the page

- Padlocks at the end of authenticated method lines

- A more complete definition of authentication service methods

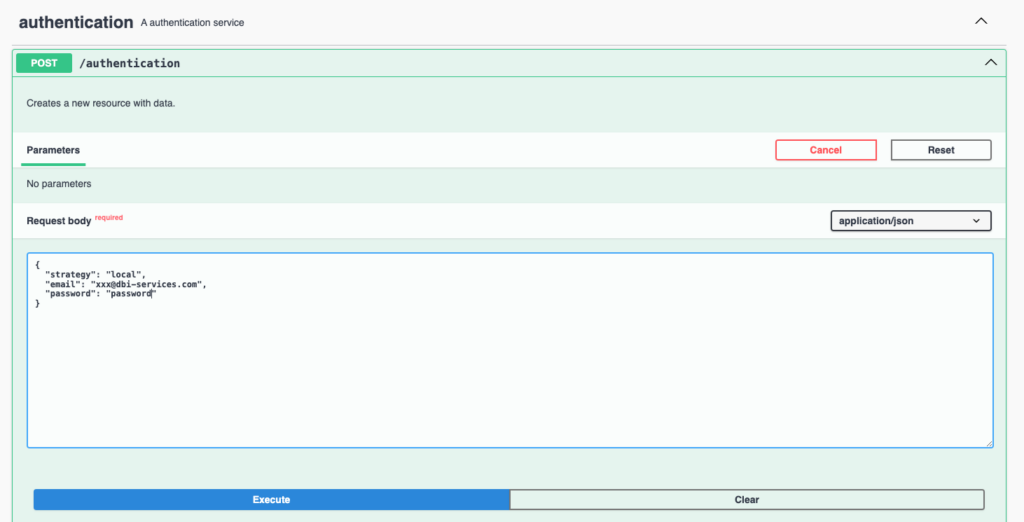

Obtain an access token with the UI

Obtain an access token with the UI

First, I need to log in with my credentials to get a token. After that, the bearer token will be used in the next authenticated calls:

The JSON object sent in the request body contains the identification information. In return, we’ll get an accessToken useful for the next calls:

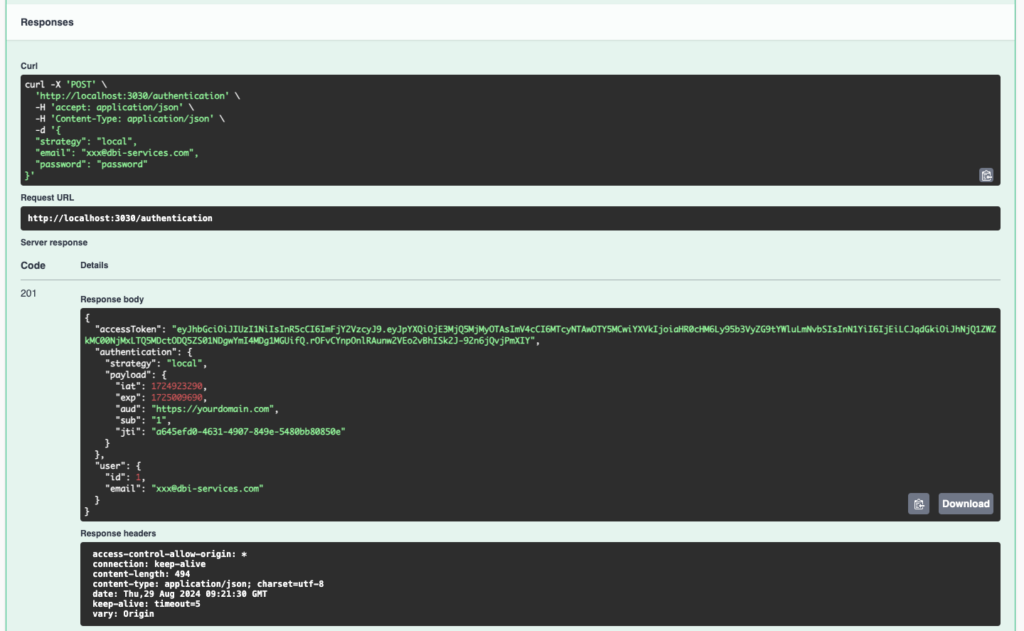

Second step, define the bearer token for authenticated requests

Second step, define the bearer token for authenticated requests

Each call to an authenticated method requires the token to be sent in the http headers. We therefore register the token in Swagger UI by clicking on the Authorize button and paste the token obtained during the authentication step:

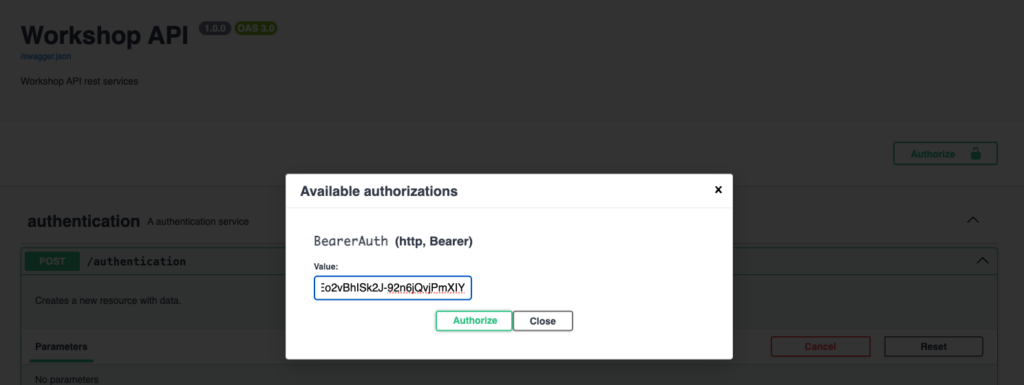

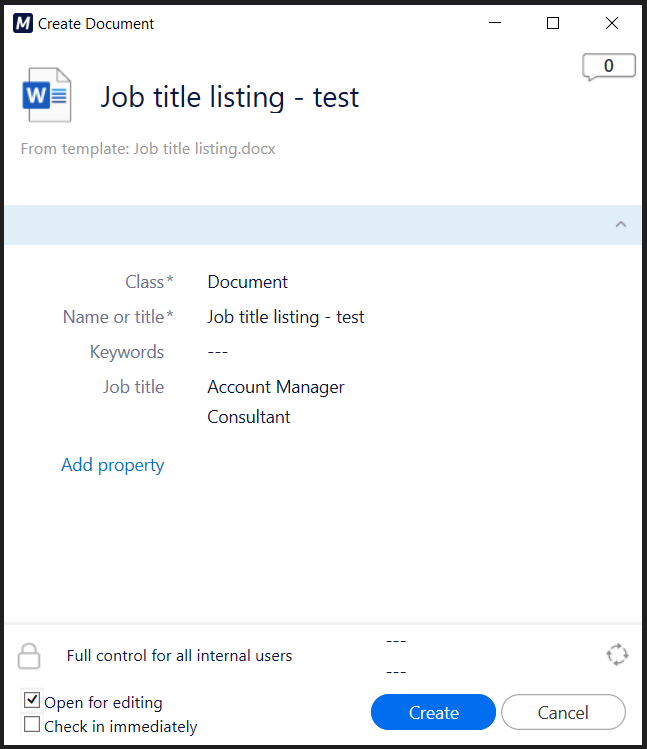

Testing authenticated methods

Testing authenticated methods

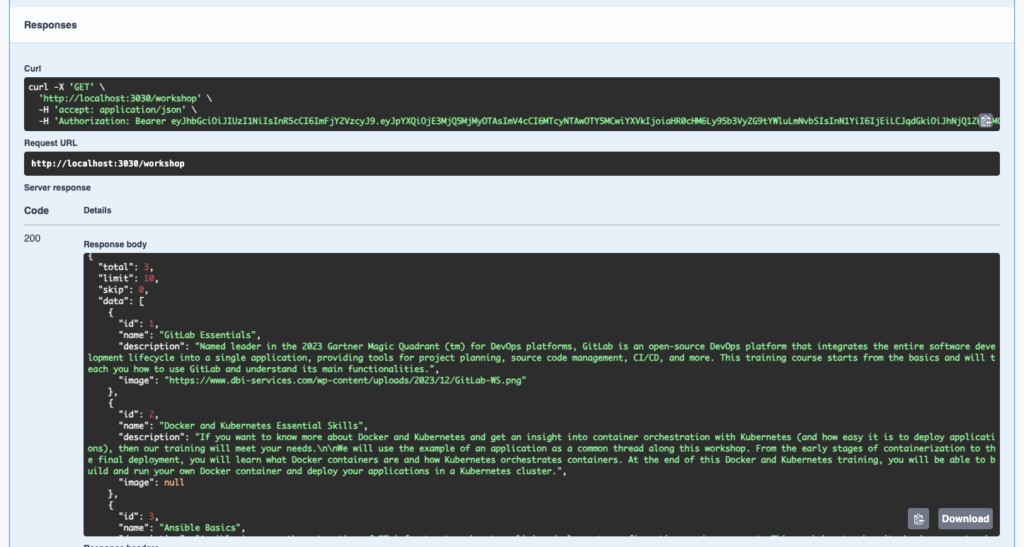

Once the token has been entered into Swagger UI, I use the methods without worrying about authentication:

Swagger UI sends the token in the http headers to authenticate the request. Then the api returns the data:

Conclusion

Conclusion

Managing Feathers.js authentication in Swagger UI is entirely possible. With a little extra configuration, it becomes easy to use authentication and authenticated methods. It’s even simple to test all API methods and document their use.

L’article Manage Feathers.js authentication in Swagger UI est apparu en premier sur dbi Blog.

“Edit Top 200 rows” does not work between SSMS 18.12.1 & SQL Server 2022

Recently after a migration from SQL Server 2012 to SQL Server 2022, a end user contact me because SQL Server Management Studio (SSMS) does not work anymore when he want t o edit rows…

I was surprised and do a test…

The version of SSMS is 18.12.1 and it’s a version coming out the 21.06.2022…

It’s the last version before the version 19. More information about the ssms verions here

I install this version of SSMS and an instance SQL Server 2022 named inst01 with the database

AdventureWorks2022.

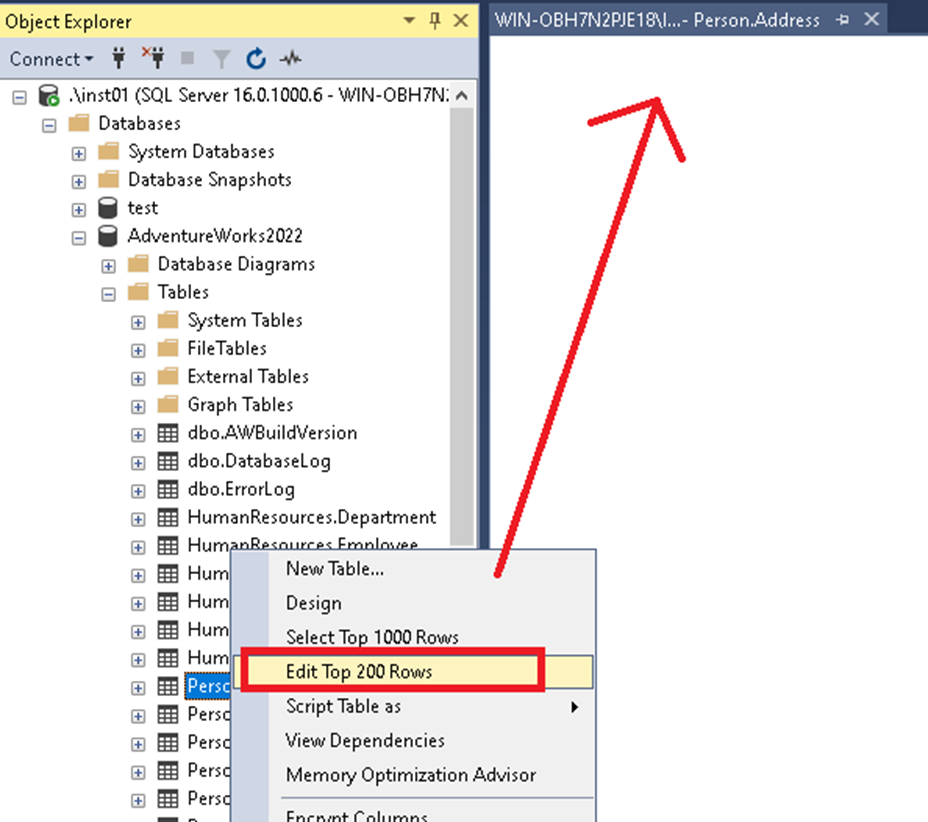

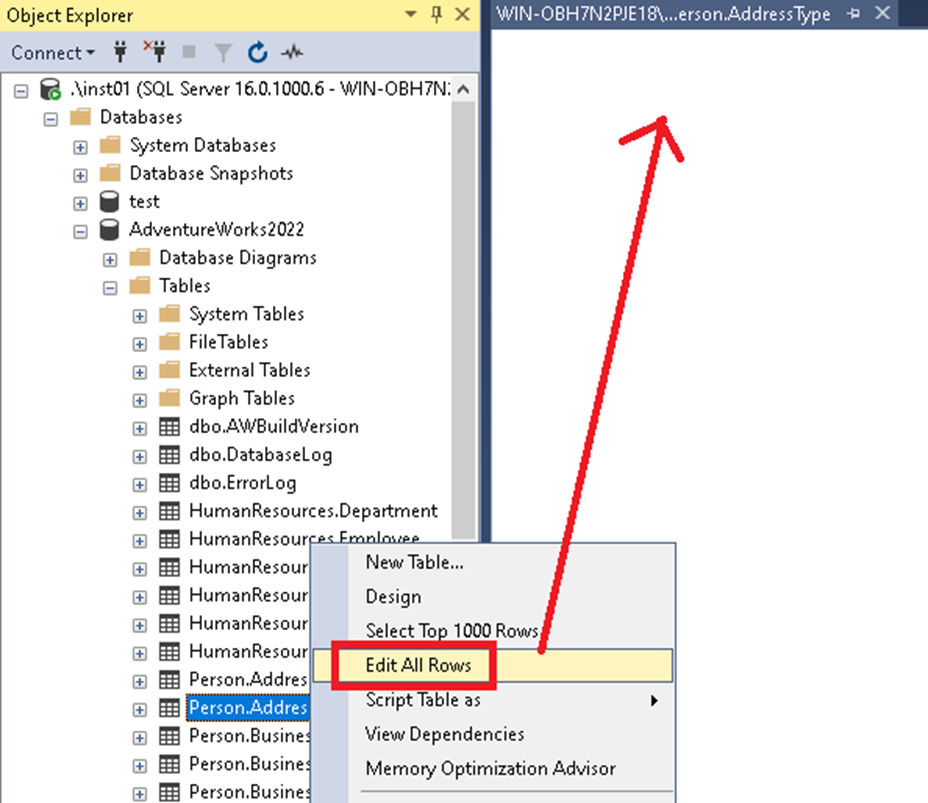

When I go on a table through the Object Explorer, and right click on “Edit top 200 rows”, I have am empty query window:

No error message and nothing can be done.

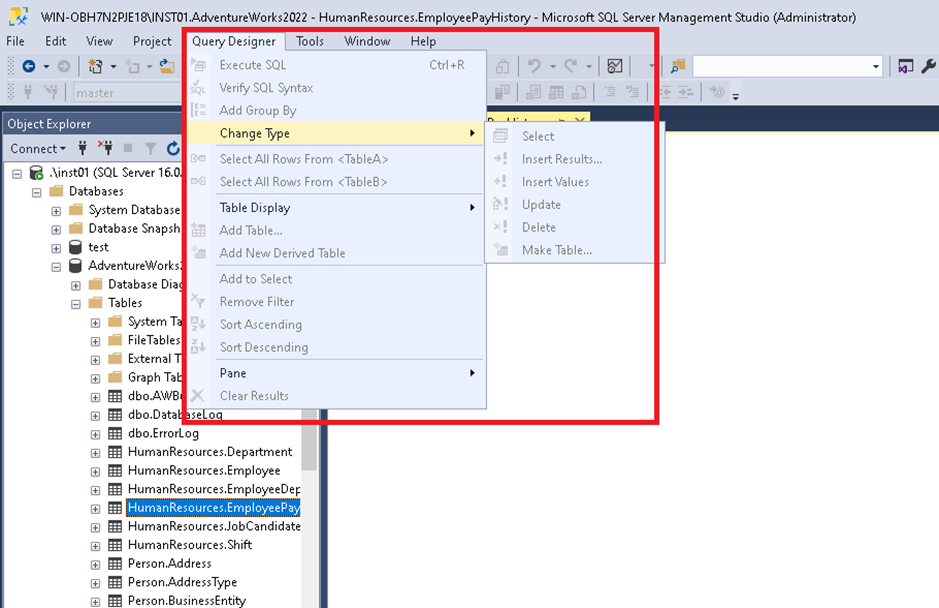

I searching in the Query designer to see if I can change something but all options are disable:

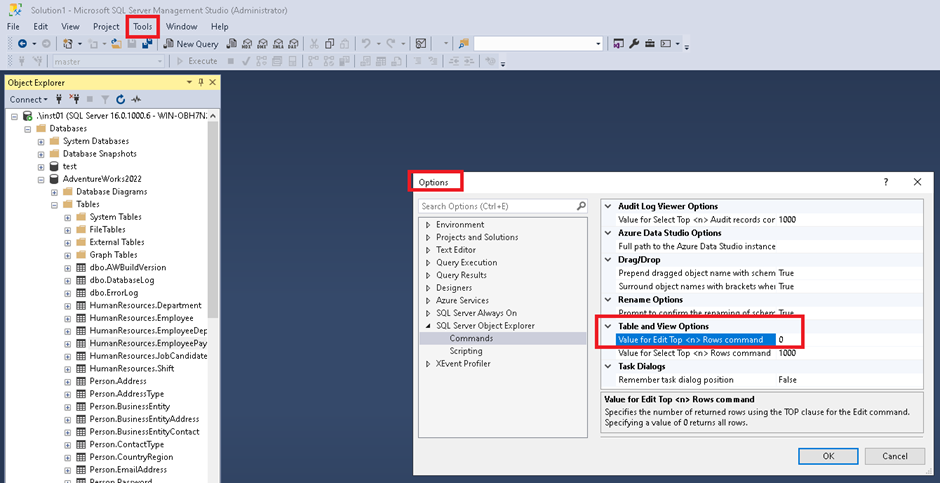

I also try to change the threshold to have all rows in Tools> Options:

But the result is the same:

Conclusion: No workaround, nothing to do except update the SSMS version to a recent one (19 or more) to have again this option available for SQL Server 2022 instances.

Hope this can help you if you have the same issue and you don’t know why…

L’article “Edit Top 200 rows” does not work between SSMS 18.12.1 & SQL Server 2022 est apparu en premier sur dbi Blog.

Power BI – Scheduled refresh stuck

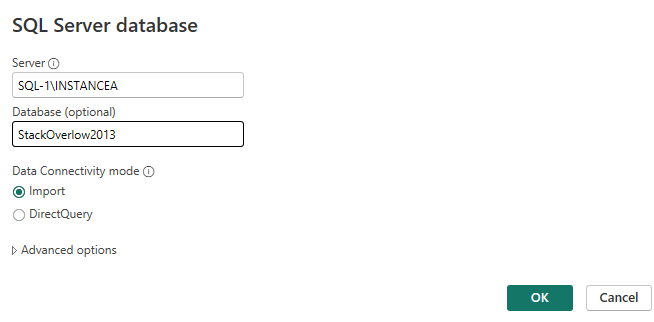

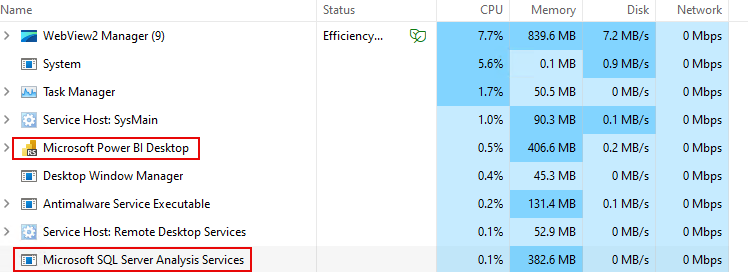

When creating reports, there are several modes through which we can import data. In our case, reports had been created using the “import mode.” In this mode, the data is imported or copied into our reports to be included. This then allows us to build (for example) dashboards.

Once the report is published, the data presented by the report is not automatically updated. Therefore, if data is added between the moment the report is published and the report is displayed, it will not be accessible or visible to the end users.

Here are some details:

- I create my report

- I connect to my datasource

- I choose the “import mode” to import my data

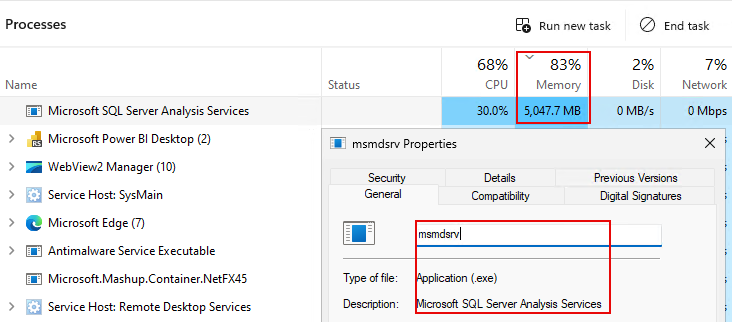

- From what we can see (via this mode), Power BI will call the Microsoft SQL Server Analysis Services to load the data into memory (Power BI launches an instance of SSAS through a separate process).

We can observe this:

- Start of data loading via SSAS

- Data is being loaded via SSAS

Changes are then made to how data is structured and organized (through xVelocity, VertiPaq).

Thus, it is necessary to load the entire dataset before being able to query it. The problem arises when the report has been published and the data is updated after publication. When the report is run, it does not reflect the changes made after its publication.

Here is an example:

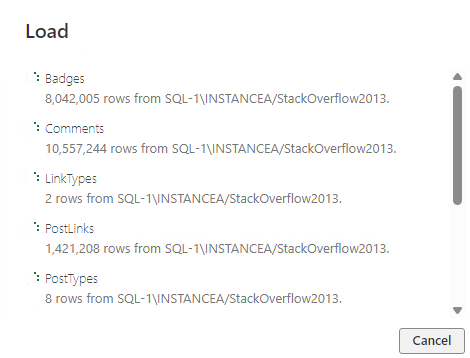

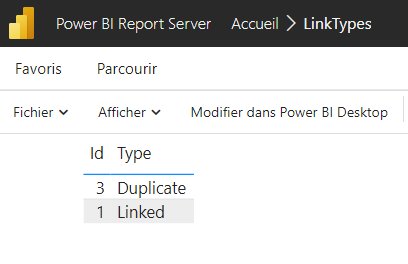

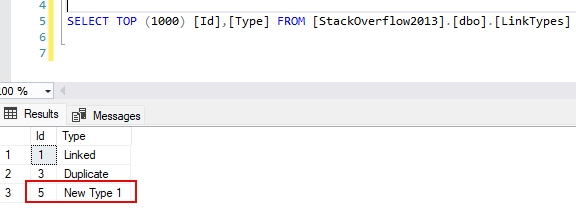

- My report queries the LinkTypes table, which has 2 types of links: “Linked” and “Duplicate”

- Once published, I can see a table displaying this data

- I add a new row to the LinkTypes table

- A new run of the report does not display this data

To address this issue, it is possible to create scheduled refreshes to update the data and ensure the report has up-to-date information.

What is the problem?

What is the problem?

In the case of our client, the scheduled refreshes were not occurring and remained stuck in the “in progress” or “refresh in progress” state. As a result, the reports did not have up-to-date data.

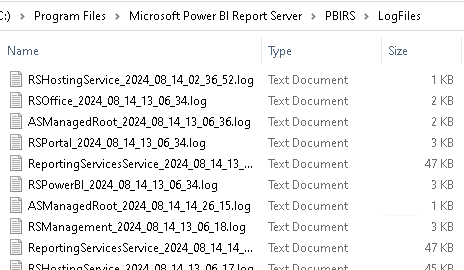

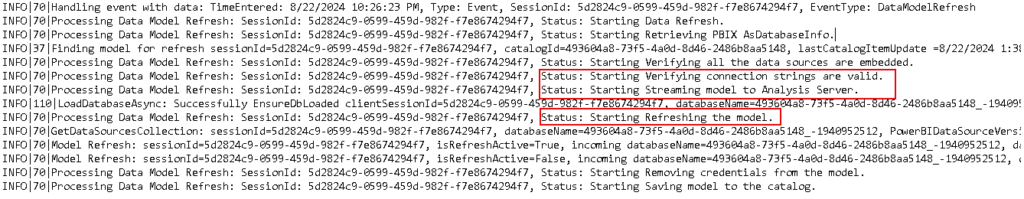

To understand the origin of the problem, we tried to analyze logs:

- We checked the Power BI logs. However, we found nothing. There were no errors (no timeouts, no exceptions, etc)

- The event viewer of the server hosting the Power BI service

- The SQL Server error log that hosts the Power BI databases

- SQL Server waits

- Extended events

- The jobs created by Power BI (which correspond to the scheduled refreshes) did not return any errors

To understand where the problem might be coming from, we need to analyze what happens when a scheduled refresh is requested.

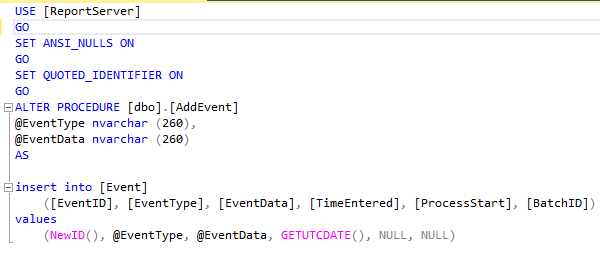

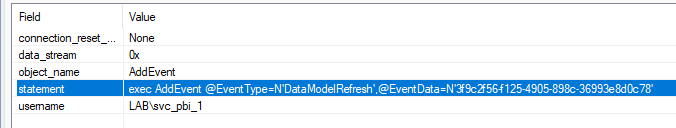

When a scheduled refresh is requested, here are some of the operations that occur:

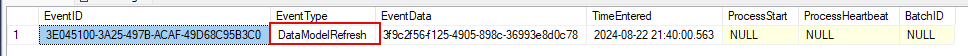

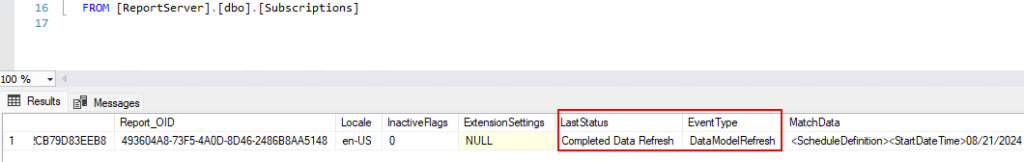

- The stored procedure “AddEvent” is called. This procedure inserts a row into the “Event” table:

- A row is added to the “Event” table

- Power BI is notified that data needs to be updated

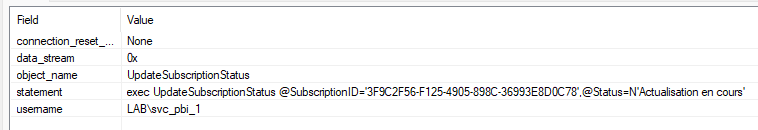

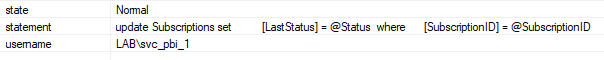

- The status of the subscription is updated (the value of the “@Status” is in Fench because I triggered a scheduled refresh through a web browser that uses French language)

Other objects are then updated, such as the notifications table. The Event table is also updated to remove the corresponding event :

- The TakeEventFromQueue is a stored procedure that calls a delete operation

In our client’s case, the Events table contained data, but actions to execute a scheduled refresh were not adding any rows to the Event table. We decided to clear the contents of the Events table after backing up the database. After this action, the reports started updating correctly.

Thank you, Amine Haloui.

L’article Power BI – Scheduled refresh stuck est apparu en premier sur dbi Blog.

SQL Server: Basic AG – One listener, multiple databases

When it is not possible to have SQL Server Enterprise and there is a need to set up an AlwaysOn cluster, it is possible to use SQL Server Standard and implement basic availability groups.

However, the Standard edition of SQL Server comes with certain limitations when it comes to availability groups.

Here are some of these limitations

- Limited to 2 replicas

- No read-only access on the secondary replica

- No possibility to perform backups on the secondary

- 1 database per availability group

However some clients come with the following constraints

- Install an application that deploys and installs multiple databases

- Use SQL Server Standard

- Use only one listener, as the applications will only use one listener to connect to the primary replica

Requirements

- 1 database = 1 availability group = 0 or 1 listener

- We need to install multiple databases

- Our applications are configured to use only one listener (in their connection string), such as SharePoint, EasyVista, Business Central, M3…

- Therefore we will need to create multiple availability groups in order to benefit from high availability

Here are the questions that arise

If I need to install 10 databases for my application, this requires creating 10 availability groups and therefore 0 or several listeners.

- How can I ensure that all databases are in high availability with only one listener ?

- How can I have only one listener for our applications, multiple availability groups, and one database per availability group ?

- How can this limitation be overcome ?

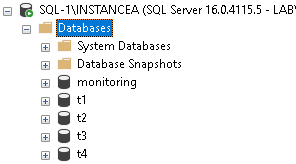

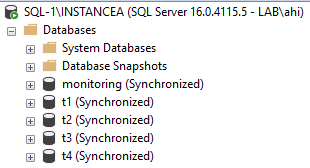

Here is a summary of the solution

- We create our cluster

- We enable the AlwaysOn feature on all replicas

- We create our availability groups

- 1 availability group per database. Each of these availability groups will not contain any listener.

- 1 availability group containing a “monitoring” database and a listener

- When this availability group fails over, all other availability groups fail over as well. This is the “reference” database. All availability groups must share the same primary replica as the availability group that contains the “monitoring” database

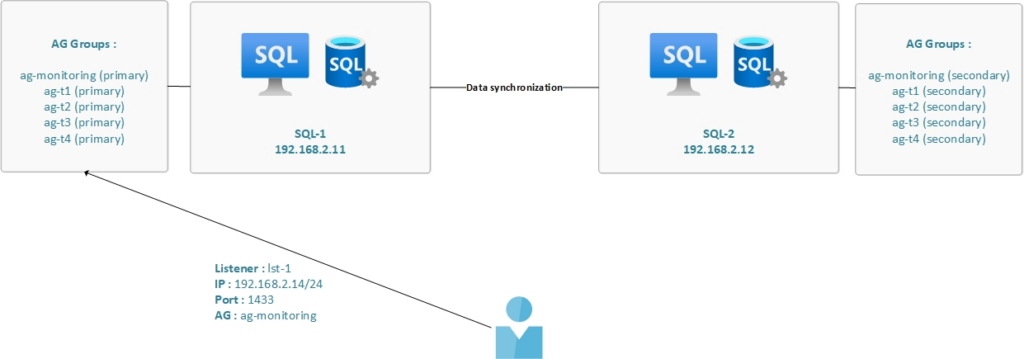

Case 1

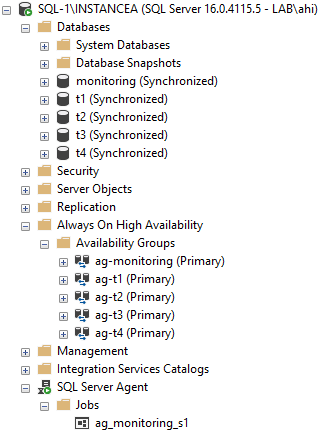

The availability groups share the same primary replica :

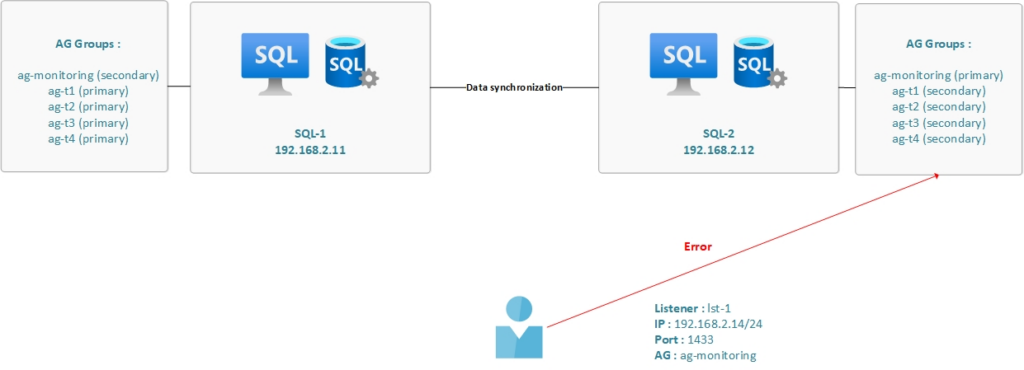

Case 2

The availability groups do not share the same primary replica :

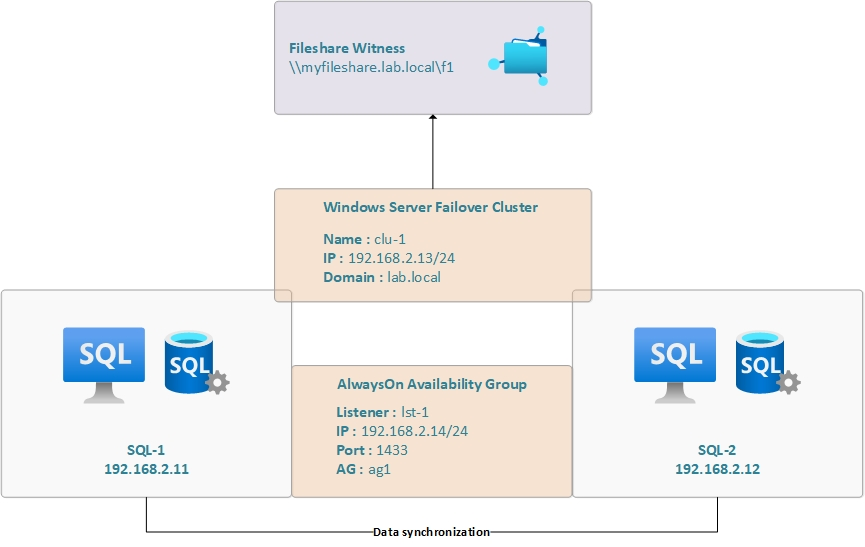

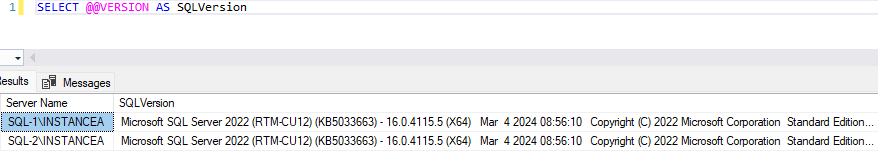

Here are some details about the environment

Virtual machines :

HostnameIPDomainFunctionOSad192.168.2.10lab.localDomain controlerWindows Server 2022sql-1192.168.2.11lab.localReplica 1Windows Server 2022sql-2192.168.2.12lab.localReplica 2Windows Server 2022Failover cluster details

- Failover cluster name : clu-1.lab.local

- IP : 192.168.2.13/24

SQL Server details

ReplicaIPSQL Server versionsql-1192.168.2.11/24SQL Server 2022 Standardsql-2192.168.2.12/24SQL Server 2022 StandardAlwaysOn details

AG nameEndpoint portPortListenerIPag-monitoring50221433lst-1192.168.2.14/24ag-t15022N/AN/AN/Aag-t25022N/AN/AN/Aag-t35022N/AN/AN/Aag-t45022N/AN/AN/A Global architecture

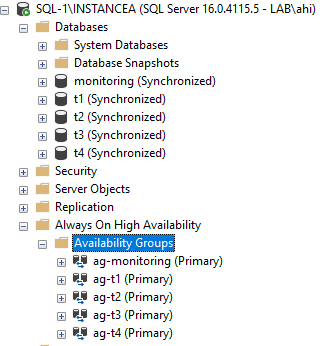

Our SQL Server instances

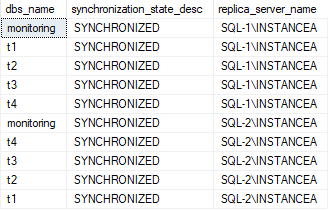

We integrate our databases to the availability groups and we check their states

SELECT

dbs.name as dbs_name,

dhdrs.synchronization_state_desc,

ar.replica_server_name

FROM sys.dm_hadr_database_replica_states AS dhdrs

INNER JOIN sys.availability_replicas AS ar

ON dhdrs.replica_id = ar.replica_id

INNER JOIN sys.databases AS dbs

ON dhdrs.database_id = dbs.database_id

ORDER BY ar.replica_server_name ASC

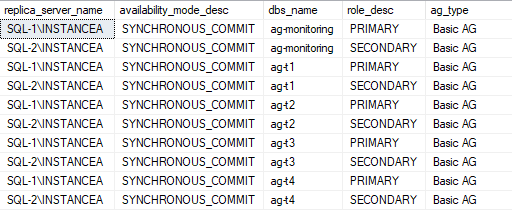

We check the availability groups configuration

SELECT

ar.replica_server_name,

ar.availability_mode_desc,

ag.[name] AS dbs_name,

dhars.role_desc,

CASE

WHEN ag.basic_features = 1 THEN 'Basic AG'

ELSE 'Not Basic AG'

END AS ag_type

FROM sys.availability_replicas AS ar

INNER JOIN sys.availability_groups AS ag

ON ar.group_id = ag.group_id

INNER JOIN sys.dm_hadr_availability_replica_states AS dhars

ON ar.replica_id = dhars.replica_id Solutions

Solutions

We present two solutions to ensure that the availability groups share the same primary replica:

- Solution 1: a job that runs every 5 minutes and checks if the availability groups share the same replica. If they do not, a failover is performed. The “reference” availability group in our case is the group named “ag-monitoring” The other availability groups must share the same primary replica.

- Solution 2: an alert and a job that perform the following tasks:

- The alert is triggered when a failover is detected. The alert then calls a job.

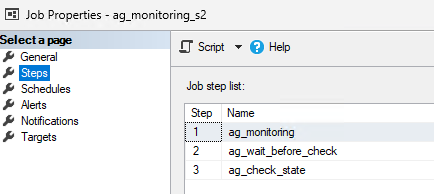

- This job is divided into 3 steps:

- Save the failover date

- Wait for a certain duration before checking the state of the availability groups (the failover may take some time)

- Check the configuration of the availability groups (what their primary replica is)

- If the availability groups ag-t1, ag-t2, ag-t3, and ag-t4 do not share the same primary replica, a failover is performed

- This job is divided into 3 steps:

- The alert is triggered when a failover is detected. The alert then calls a job.

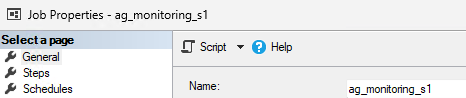

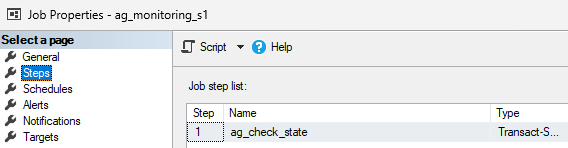

Solution 1

The job is deployed on both replicas. We check if the database is open for read/write before performing any operations.

Job configuration :

Code

IF ((SELECT DATABASEPROPERTYEX('monitoring', 'Updateability')) = 'READ_WRITE')

BEGIN

DECLARE @replica_server NVARCHAR(100),

@ag_name NVARCHAR(100),

@failover_command NVARCHAR(100)

DECLARE cursor_failover_commands CURSOR FOR

WITH T_AG_Monitoring (replica_server, ag_name, role_name)

AS

(

SELECT

ar.replica_server_name,

ag.[name] as ag_name,

dhars.role_desc

FROM sys.availability_replicas AS ar

INNER JOIN sys.availability_groups AS ag

ON ar.group_id = ag.group_id

LEFT JOIN sys.dm_hadr_availability_replica_states as dhars

ON ar.replica_id = dhars.replica_id

WHERE ag.[name] = 'ag-monitoring'

),

T_AG_Databases (replica_server, ag_name, role_name)

AS

(

SELECT

ar.replica_server_name,

ag.[name] as ag_name,

dhars.role_desc

FROM sys.availability_replicas AS ar

INNER JOIN sys.availability_groups AS ag

ON ar.group_id = ag.group_id

LEFT JOIN sys.dm_hadr_availability_replica_states as dhars

ON ar.replica_id = dhars.replica_id

WHERE ag.[name] <> 'ag-monitoring'

) SELECT

T_AG_Databases.replica_server,

T_AG_Databases.ag_name,

CASE

WHEN T_AG_Databases.role_name IS NULL THEN 'ALTER AVAILABILITY GROUP [' + T_AG_Databases.ag_name + '] FAILOVER;'

END AS failover_command

FROM T_AG_Monitoring

RIGHT JOIN T_AG_Databases

ON T_AG_Monitoring.replica_server = T_AG_Databases.replica_server

AND T_AG_Monitoring.role_name = T_AG_Databases.role_name

OPEN cursor_failover_commands

FETCH cursor_failover_commands INTO @replica_server, @ag_name, @failover_command

WHILE @@FETCH_STATUS = 0

BEGIN

IF (LEN(@failover_command) >= 1)

BEGIN

EXEC(@failover_command);

END

FETCH cursor_failover_commands INTO @replica_server, @ag_name, @failover_command

END

CLOSE cursor_failover_commands

DEALLOCATE cursor_failover_commands

ENDSolution 2

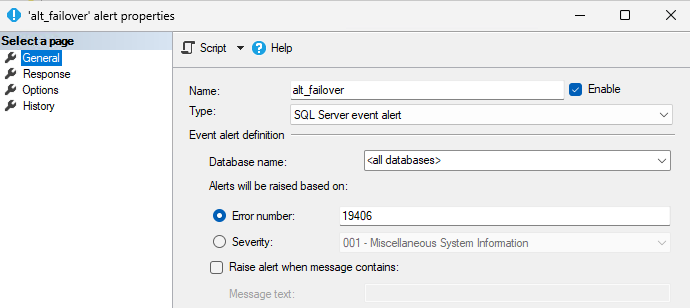

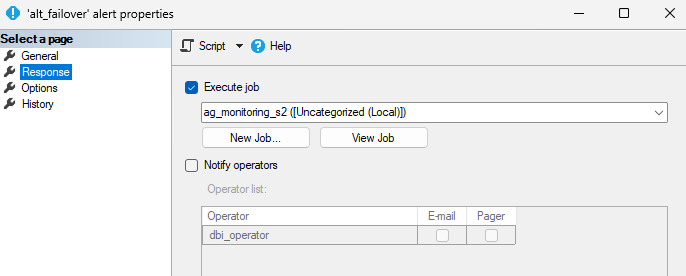

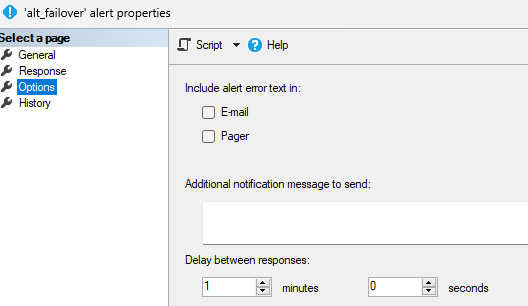

The alert and the job are present on both replicas.

Alert configuration:

Job configuration:

Code :

USE

GO

BEGIN TRANSACTION

DECLARE @ReturnCode INT

SELECT @ReturnCode = 0

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'[Uncategorized (Local)]' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'[Uncategorized (Local)]'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'ag_monitoring_s2',

@enabled=0,

@notify_level_eventlog=0,

@notify_level_email=0,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'No description available.',

@category_name=N'[Uncategorized (Local)]',

@owner_login_name=N'sa', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'ag_monitoring',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=3,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=1,

@retry_interval=1,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'IF ((SELECT DATABASEPROPERTYEX(''monitoring'', ''Updateability'')) = ''READ_WRITE'')

BEGIN

MERGE monitoring.dbo.t_tracefailover_tfr AS t_target

USING (

SELECT

id,

last_occurrence_date,

last_occurrence_time

FROM msdb.dbo.sysalerts

) AS t_source

ON t_source.id = t_target.tfr_id

WHEN NOT MATCHED THEN

INSERT (tfr_id, tfr_last_occurence_date, tfr_last_occurence_time) VALUES (t_source.id, t_source.last_occurrence_date, t_source.last_occurrence_time)

WHEN MATCHED THEN

UPDATE SET t_target.tfr_last_occurence_date = t_source.last_occurrence_date, t_target.tfr_last_occurence_time = t_source.last_occurrence_time;

END

ELSE

BEGIN

PRINT ''Secondary replica'';

END',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'ag_wait_before_check',

@step_id=2,

@cmdexec_success_code=0,

@on_success_action=3,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'BEGIN

WAITFOR DELAY ''00:01'';

END;',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'ag_check_state',

@step_id=3,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'IF ((SELECT DATABASEPROPERTYEX(''monitoring'', ''Updateability'')) = ''READ_WRITE'')

BEGIN

DECLARE @replica_server NVARCHAR(100),

@ag_name NVARCHAR(100),

@failover_command NVARCHAR(100)

DECLARE cursor_failover_commands CURSOR FOR

WITH T_AG_Monitoring (replica_server, ag_name, role_name)

AS

(

SELECT

ar.replica_server_name,

ag.[name] as ag_name,

dhars.role_desc

FROM sys.availability_replicas AS ar

INNER JOIN sys.availability_groups AS ag

ON ar.group_id = ag.group_id

LEFT JOIN sys.dm_hadr_availability_replica_states as dhars

ON ar.replica_id = dhars.replica_id

WHERE ag.[name] = ''ag-monitoring''

),

T_AG_Databases (replica_server, ag_name, role_name)

AS

(

SELECT

ar.replica_server_name,

ag.[name] as ag_name,

dhars.role_desc

FROM sys.availability_replicas AS ar

INNER JOIN sys.availability_groups AS ag

ON ar.group_id = ag.group_id

LEFT JOIN sys.dm_hadr_availability_replica_states as dhars

ON ar.replica_id = dhars.replica_id

WHERE ag.[name] <> ''ag-monitoring''

) SELECT

T_AG_Databases.replica_server,

T_AG_Databases.ag_name,

CASE

WHEN T_AG_Databases.role_name IS NULL THEN ''ALTER AVAILABILITY GROUP ['' + T_AG_Databases.ag_name + ''] FAILOVER;''

END AS failover_command

FROM T_AG_Monitoring

RIGHT JOIN T_AG_Databases

ON T_AG_Monitoring.replica_server = T_AG_Databases.replica_server

AND T_AG_Monitoring.role_name = T_AG_Databases.role_name

OPEN cursor_failover_commands

FETCH cursor_failover_commands INTO @replica_server, @ag_name, @failover_command

WHILE @@FETCH_STATUS = 0

BEGIN

IF (LEN(@failover_command) >= 1)

BEGIN

PRINT ''Failover'';

EXEC(@failover_command);

END

FETCH cursor_failover_commands INTO @replica_server, @ag_name, @failover_command

END

CLOSE cursor_failover_commands

DEALLOCATE cursor_failover_commands

END',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

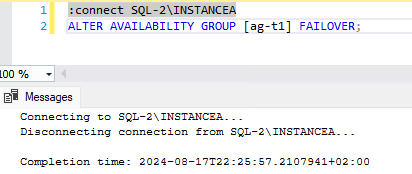

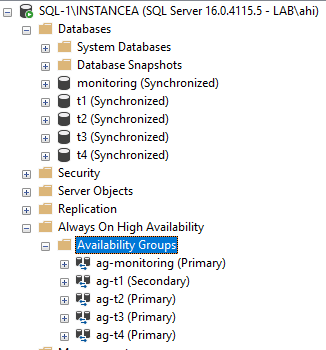

GOFirst solution test :

Initial configuration : the availability groups share the same primary replica.

We perform a failover of the availability group ag-t1.

We check the configuration:

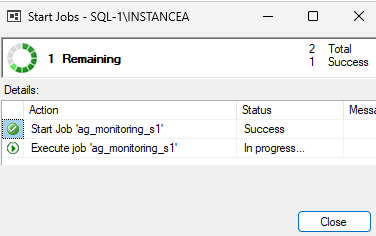

The job is executing:

We check the configuration:

Conclusion

Conclusion

- Solution 1 has 2 drawbacks:

- The job could potentially be triggered during a failover

- The job runs every 5 minutes. Therefore, in the worst case, there could be a 5-minute delay in aligning the availability groups

- Solution 2 is more complex and has the following drawbacks:

- The current backup history is a copy of the dbo.sysalerts table. As a result, the data in this table does not use the GETDATE() function. We could add a column to store the date of the last failover

- The job can be executed multiple times because it is triggered by an alert (alt_failover)

It is also important to verify data synchronization before performing a failover.

Thank you, Amine Haloui.

L’article SQL Server: Basic AG – One listener, multiple databases est apparu en premier sur dbi Blog.

CloudNativePG – Connecting external applications

Now that we know how we can benchmark a CloudNativePG deployment, it is time to look at how we can connect external applications to the PostgreSQL cluster. Usually, applications run in the same Kubernetes cluster and can directly talk to our PostgreSQL deployment, but sometimes it is required to also connect with external applications or services. By default, this does not work, as nothing is exposed externally.

You can easily check this by looking at the services we currently have:

k8s@k8s1:~$ kubectl get services -n default

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 32d

my-pg-cluster-r ClusterIP 10.107.190.52 <none> 5432/TCP 8d

my-pg-cluster-ro ClusterIP 10.109.169.21 <none> 5432/TCP 8d

my-pg-cluster-rw ClusterIP 10.103.171.191 <none> 5432/TCP 8d

There are IP addresses and services for all pods in the cluster, but those addresses are only available inside the cluster. For the external IP addresses there is “<none>” for all of them.

Before we make those services available externally, lets quickly check what they mean:

- my-pg-cluster-r: Connects to any of the nodes for read only operations

- my-pg-cluster-ro: Connects always to a read only replica (hot standby)

- my-pg-cluster-rw: Connects always to the primary node

Whatever connects to the cluster, should us one of those services and never connect to a PostreSQL instance directly. The reason is, that those services are managed by the operator and you should rely on the internal Kubernetes DNS for connecting to the cluster services.

What we need to expose the PostgreSQL cluster services is an Ingress, and an Ingress Controller on top of that in combination with a load balancer.

One of the quite popular Ingress Controllers is the Ingress-Nginx Controller, and this is the one we’re going to use here as well. Getting this installed, can again easily be done by using Helm, in pretty much the same way as we did it with OpenEBS in the storage post, but before we’re going to deploy the METALLB load balancer:

k8s@k8s1:~$ helm install metallb metallb/metallb --namespace metallb-system --create-namespace

NAME: metallb

LAST DEPLOYED: Fri Aug 9 09:43:03 2024

NAMESPACE: metallb-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

MetalLB is now running in the cluster.

Now you can configure it via its CRs. Please refer to the metallb official docs

on how to use the CRs.

This creates a new namespace called “metalllb-system” and a few pods:

k8s@k8s1:~$ kubectl get pods -A | grep metal

metallb-system metallb-controller-77cb7f5d88-hxndw 1/1 Running 0 26s

metallb-system metallb-speaker-5phx6 4/4 Running 0 26s

metallb-system metallb-speaker-bjdxj 4/4 Running 0 26s

metallb-system metallb-speaker-c54z6 4/4 Running 0 26s

metallb-system metallb-speaker-xzphl 4/4 Running 0 26s

The next step is to create the Ingress-Nginx Controller:

k8s@k8s1:~$ helm upgrade --install ingress-nginx ingress-nginx --repo https://kubernetes.github.io/ingress-nginx --namespace ingress-nginx --create-namespace

Release "ingress-nginx" does not exist. Installing it now.

NAME: ingress-nginx

LAST DEPLOYED: Fri Aug 9 09:49:43 2024

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the load balancer IP to be available.

You can watch the status by running 'kubectl get service --namespace ingress-nginx ingress-nginx-controller --output wide --watch'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: example

namespace: foo

spec:

ingressClassName: nginx

rules:

- host: www.example.com

http:

paths:

- pathType: Prefix

backend:

service:

name: exampleService

port:

number: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

Same story here, we get a new namespace:

k8s@k8s1:~$ kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-69bd47995d-krt7h 1/1 Running 0 2m33s

At this stage, you’ll notice that we still do not have any services exposed externally ( we still see “<pending>” for the EXTERNAL-IP):

k8s@k8s1:~$ kubectl get svc -A | grep nginx

ingress-nginx ingress-nginx-controller LoadBalancer 10.109.240.37 <pending> 80:31719/TCP,443:32412/TCP 103s

ingress-nginx ingress-nginx-controller-admission ClusterIP 10.103.255.169 <none> 443/TCP 103s

This is not a big surprise, as we did not tell the load balancer which IP addresses to request/assign. This is done easily:

k8s@k8s1:~$ cat lb.yaml

---

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: default

namespace: metallb-system

spec:

addresses:

- 192.168.122.210-192.168.122.215

autoAssign: true

---

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: default

namespace: metallb-system

spec:

ipAddressPools:

- default

k8s@k8s1:~$ kubectl apply -f lb.yaml

ipaddresspool.metallb.io/default created

l2advertisement.metallb.io/default created

k8s@k8s1:~$ kubectl get services -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.109.240.37 192.168.122.210 80:31719/TCP,443:32412/TCP 3m32s

ingress-nginx-controller-admission ClusterIP 10.103.255.169 <none> 443/TCP 3m32s

From now on the LoadBalancer got an IP address automatically assigned from the pool of addresses we’ve assigned. The next steps are covered in the CloudNativePG documentation: First we need a config map for the service we want to expose:

k8s@k8s1:~$ cat tcp-services-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: tcp-services

namespace: ingress-nginx

data:

5432: default/my-pg-cluster-rw:5432

k8s@k8s1:~$ kubectl apply -f tcp-services-configmap.yaml

configmap/tcp-services created

k8s@k8s1:~$ kubectl get cm -n ingress-nginx

NAME DATA AGE

ingress-nginx-controller 1 6m4s

kube-root-ca.crt 1 6m8s

tcp-services 1 12s

Now we need to modify the ingress-nginx service to include the new port:

k8s@k8s1:~$ kubectl get svc ingress-nginx-controller -n ingress-nginx -o yaml > service.yaml

k8s@k8s1:~$ vi service.yaml

...

ports:

- appProtocol: http

name: http

nodePort: 31719

port: 80

protocol: TCP

targetPort: http

- appProtocol: https

name: https

nodePort: 32412

port: 443

protocol: TCP

targetPort: https

- appProtocol: tcp

name: postgres

port: 5432

targetPort: 5432

...

k8s@k8s1:~$ kubectl apply -f service.yaml

Warning: resource services/ingress-nginx-controller is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

service/ingress-nginx-controller configured

The last step is to link our config map into the “ingress-nginx-controller” deployment:

k8s@k8s1:~$ kubectl edit deploy ingress-nginx-controller -n ingress-nginx

...

spec:

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-nginx-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --tcp-services-configmap=ingress-nginx/tcp-services

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

- --enable-metrics=false

...

From now on the PostgreSQL cluster can be reached from outside the Kubernetes cluster:

k8s@k8s1:~$ kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.109.240.37 192.168.122.210 80:31719/TCP,443:32412/TCP,5432:32043/TCP 6d23h

ingress-nginx-controller-admission ClusterIP 10.103.255.169 <none> 443/TCP 6d23h

k8s@k8s1:~$ psql -h 192.168.122.210

Password for user k8s:

psql: error: connection to server at "192.168.122.210", port 5432 failed: FATAL: password authentication failed for user "k8s"

connection to server at "192.168.122.210", port 5432 failed: FATAL: password authentication failed for user "k8s"

L’article CloudNativePG – Connecting external applications est apparu en premier sur dbi Blog.

Setting up MariaDB Galera Cluster

In this blog I will show you how you can setup a basic MariaDB Galera Cluster on Debian 11 (bullseye).

If you wish to have a more general overview first you can have a look here: https://mariadb.com/kb/en/what-is-mariadb-galera-cluster/

For this we have 3 servers: galera-cluster-1a/b/c. The following steps will first be executed on my first node, galera-cluster-1a still these steps have to executed on the other nodes as well to build the whole cluster.

First the servers will be prepared for the installation. This includes different packages to be installed and ports to be opened.

galera-cluster-1a:~$ sudo apt update

galera-cluster-1a:~$ sudo apt-get install ufw

galera-cluster-1a:~$ sudo ufw enable

galera-cluster-1a:~$ sudo ufw allow 3306

galera-cluster-1a:~$ sudo ufw allow 4444

galera-cluster-1a:~$ sudo ufw allow 4567

galera-cluster-1a:~$ sudo ufw allow 4568

galera-cluster-1a:~$ sudo ufw allow 22

Installing MariaDB 11.3.2 and other packages

galera-cluster-1a:~$ sudo apt-get install libsnmp-perl -y

galera-cluster-1a:~$ sudo curl -LsS -O https://downloads.mariadb.com/MariaDB/mariadb_repo_setup

galera-cluster-1a:~$ sudo bash mariadb_repo_setup --os-type=debian --os-version=bullseye --mariadb-server-version=11.3.2

galera-cluster-1a:~$ sudo wget http://ftp.us.debian.org/debian/pool/main/r/readline5/libreadline5_5.2+dfsg-3+b13_amd64.deb

galera-cluster-1a:~$ sudo dpkg -i libreadline5_5.2+dfsg-3+b13_amd64.deb

galera-cluster-1a:~$ sudo apt-get update

galera-cluster-1a:~$ sudo apt-get install mariadb-server mariadb-client -y

galera-cluster-1a:~$ sudo systemctl enable mariadb

sudo apt-get install percona-toolkit -y

Now you can login to MariaDB and check the version

galera-cluster-1a:~$ mariadb

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 32

Server version: 11.3.2-MariaDB-1:11.3.2+maria~deb11 mariadb.org binary distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

For the final touch for the basic installation of MariaDB we are going to secure it. Answering yes to all the changes it proposes.

galera-cluster-1a:~$ sudo mariadb-secure-installation

We recommend creating a dedicated backup user for mariabackup like this:

galera-cluster-1a:~$ mariadb

MariaDB [(none)]> create user 'mariabackup'@'%' identified by 'XXX';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> GRANT RELOAD, PROCESS, LOCK TABLES, REPLICATION CLIENT ON *.* TO 'mariabackup'@'%';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> create user 'mariabackup'@'localhost' identified by 'XXX';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> GRANT RELOAD, PROCESS, LOCK TABLES, REPLICATION CLIENT ON *.* TO 'mariabackup'@'localhost';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

Creating a dedicated directory for logs.

sudo mkdir /var/log/mariadb

sudo chown mysql:mysql /var/log/mariadb

In the /etc/mysql/mariadb.conf.d/50-server.cnf file we add the following options at the end to the [mariadb-11.3] section.

# This group is only read by MariaDB-11.3 servers.

# If you use the same .cnf file for MariaDB of different versions,

# use this group for options that older servers don't understand

[mariadb-11.3]

log_warnings=2

log_error=/var/log/mysql/mariadb.err

general_log = 1

general_log_file = /var/log/mysql/mariadb.log

[mysqldump]

user=dump

password=XXX

max_allowed_packet = 512M

[mariabackup]

user=mariabackup

password=XXX

databases-exclude=lost+found

If you want to use mariabackup for backups and replication you will have to create the user and add the last section [mariabackup]

Step 3: Configuring Galera ClusterChange the galera config file to the following. It is also possible to use the IPs instead of hostnames.

galera-cluster-1a:~$ cat /etc/mysql/mariadb.conf.d/60-galera.cnf

[galera]

# Mandatory settings

wsrep_on = ON

wsrep_cluster_name = MariaDB Galera Cluster

wsrep_cluster_address = gcomm://galera-cluster-1a,galera-cluster-1b,galera-cluster-1c

wsrep_provider = /usr/lib/galera/libgalera_smm.so

binlog_format = row

default_storage_engine = InnoDB

innodb_autoinc_lock_mode = 2

# Allow server to accept connections on all interfaces.

bind-address = 0.0.0.0

# Optional settings

wsrep_slave_threads = 1

innodb_flush_log_at_trx_commit = 0

wsrep_sst_auth = mariabackup:manager

wsrep_sst_method=mariabackup

wsrep_node_address=galera-cluster-1a

wsrep_sst_receive_address= galera-cluster-1a

Now everything is ready and we can start the MariaDB Server in cluster mode.

galera-cluster-1a:~$ sudo systemctl stop mariadb.service

galera-cluster-1a:~$ sudo galera_new_cluster

Lets check if the one node cluster has successfully started. The start position on the very first start should look like the following

galera-cluster-1a:~$ ps -f -u mysql

UID PID PPID C STIME TTY TIME CMD

mysql 2081 1 0 14:37 ? 00:00:00 /usr/sbin/mariadbd --wsrep-new-cluster --wsrep_start_position= 00000000-0000-0000-0000-000000000000:-1

Login to your instance and check the cluster size

galera-cluster-1a:~$ mariadb

MariaDB [(none)]> show global status like 'wsrep_cluster_size';

+--------------------+-------+

| Variable_name | Value |

+--------------------+-------+

| wsrep_cluster_size | 1 |

+--------------------+-------+

MariaDB [(none)]> quit

Repeat steps 1-3 on the other two nodes but you do not have to bootstrap these nodes.

Simply restart the mariadb.service at the end for them to sync with the cluster.

galera-cluster-1b:~$ sudo systemctl restart mariadb.service

galera-cluster-1c:~$ sudo systemctl restart mariadb.service

Afterwards you can check the cluster size again.

galera-cluster-1a:~$ mariadb

MariaDB [(none)]> show global status like 'wsrep_cluster_size';

+--------------------+-------+

| Variable_name | Value |

+--------------------+-------+

| wsrep_cluster_size | 3 |

+--------------------+-------+

Check out this blog about using mariabackup to repair your replication: https://www.dbi-services.com/blog/mariadb-repair-replication-through-db-restore-mariabackup/

L’article Setting up MariaDB Galera Cluster est apparu en premier sur dbi Blog.

Alfresco – Impact of SSL and LB on the import perf.

Have you ever wondered what is the impact of SSL communications or the impact of a specific Load Balancing mechanisms on the performance of your Alfresco environment? Alfresco (the Company at that time, before it became Hyland) ran some benchmark and published the results a few years ago but that might not be very relevant to you as you might be running your infrastructure very differently to what they used. Networking & latency, CPUs, memory, disks, virtualization, etc… All that will have an impact on the performance so you cannot really take external data for granted. In this blog, I will look specifically on the import side of things.

I. Setup detailsRecently, I had a customer which wanted to migrate 3 million documents to Alfresco and they wanted to know how long it could take. This specific environment has 2 Alfresco Content Services (7.x) Nodes in Cluster as well as 2 Alfresco Search Services (Solr6) Nodes using Sharding. It’s not a new environment, it has been running since several years already and has around 10TB of content stored inside. At dbi services, we have a team that can help customers execute Load Tests / Stress Tests for their applications (e.g. this blog). However, that will usually require a certain amount of time to integrate the Load Test software (like JMeter) and the target application as well as to design the needed scenarios beforehand. This customer didn’t really need to pull out the big gun as it was just to get an idea on the import speed. Instead, I proposed to simply script a small importer to be as close as possible to the exact performance that the migration would have, using the REST-API from outside of the Alfresco Cluster Nodes (to consider also the networking & latency), using the same user/permissions/ACLs, etc.

To give a bit more details regarding the setup, there is an Apache HTTPD installed on each Alfresco Nodes. The customer doesn’t have any global load balancer solution (neither hardware nor software) and therefore, to avoid single point of failure (SPOF), the DNS would redirect the traffic to any of the 2 Apache HTTPD, which would then again redirect the traffic to any of the 2 Apache Tomcat hosting the alfresco.war application. That’s one way to do it but there are other solutions possible. Therefore, the question came about what the impact of the SSL communications was exactly as well as what would be the difference if the Load Balancing mechanisms would be different. Like, for example, only redirecting the requests to the local Tomcat and not caring about the second Node. If you do that, of course you might introduce a SPOF, but for the migration purpose, which is very short-lived and that can use a decided URL/PORT, it could be an option (assuming it brings a non-negligeable performance gain).

II. Test casesOn a TEST environment, I decided to slightly update the Apache HTTPD and Apache Tomcat configurations to allow for these test cases:

- Apache HTTPD in HTTPS with Load Balancing (mod_jk) >> standard day-to-day configuration used so far, to avoid SPOF

- Apache HTTPD in HTTP with Load Balancing (mod_jk) >> normally redirect the traffic to HTTPS (above config) but I modified that to send the request to Tomcat instead

- Apache Tomcat in HTTP (bypass Apache HTTPD) >> normally blocked but I allowed it

- Apache HTTPD in HTTPS without Load Balancing (proxy) >> normally doesn’t exist but I added a simple proxy config to send the request to Tomcat instead

- Apache HTTPD in HTTP without Load Balancing (proxy) >> normally doesn’t exist but I added a simple proxy config to send the request to Tomcat instead

To be as close as possible with the real migration for this customer, I took, as input, a few of the smallest documents that will be imported, a few of the biggest (300 times bigger than the smallest) and a few around the average size (30 times bigger than the smallest). I also took different mimetypes like PDF, XML, TXT and the associated expected metadata for all of these. These documents are all using a custom type with ~10 custom properties.

I love bash/shell scripting, it’s definitively not the fastest solution (C/C++, Go, Python, Perl or even Java would be faster) but it’s still decent and above all, it’s simple, so that’s what I used. The goal isn’t to have the best performance here, but just to compare apples to apples. The script itself is pretty simple, it defines a few variables like the REST-API URL to use (which depends on the Access Method chosen), the parent folder under which imports will be done, the username and asks for a password. It takes three parameters as command line arguments, the Access Method to be used, the type of documents to import (small/average/large sizes) and the number of documents to create in Alfresco. For example:

## script_name --access_method --doc_size nb_doc_to_import

./alf_import.sh --apache-https-lb --small 1000

./alf_import.sh --apache-http-lb --average 1000

./alf_import.sh --direct --large 1000

./alf_import.sh --apache-https-nolb --small 1000

./alf_import.sh --apache-http-nolb --small 1000

With these parameters, the script would select the templates to use and their associated metadata and then start a timer and a loop to import all the documents, in a single-thread (meaning 1 after the other). As soon as the last document has been imported, it would stop the timer and provide the import time as outcome. It’s really nothing complex, around 20 lines of code, simple and straightforward.

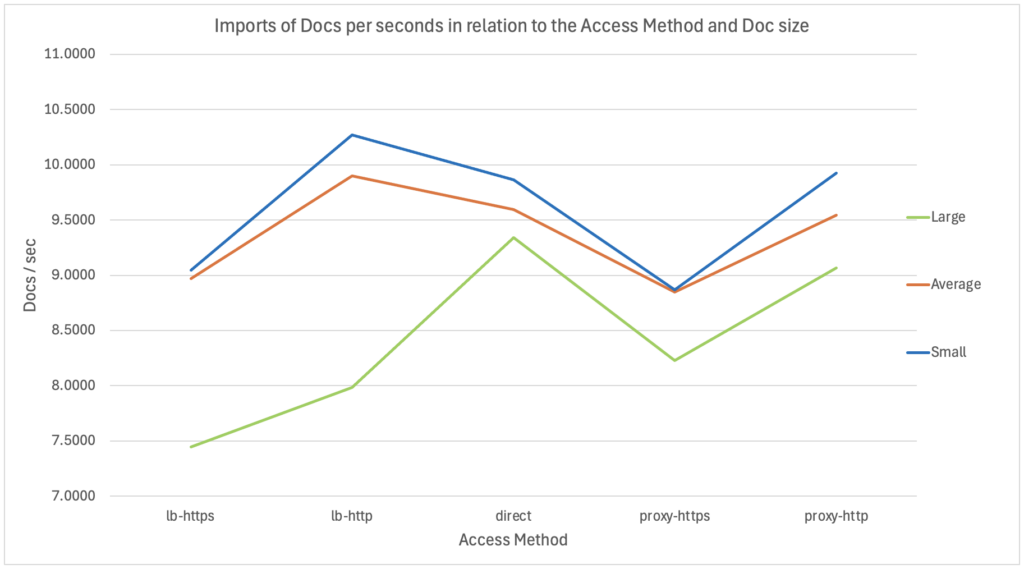

IV. Results – HTTPS vs HTTP & different access methods – Single-threadI did 3 runs of 1000 documents, for each combination possible (between the access method and the document size). I then took the average execution time for the 3 runs which I transformed into an import speed (Docs/sec). The resulting graph looked like that:

As a reminder, this is a single-thread import using REST-API inside a bash/shell script executed from a remote server (through the Network). So, what can we see on these results?

- First, and as I expected, we can see around 10/15% degradation when using HTTPS instead of HTTP.

- Then, between the smallest and the average size documents, we can only see a very small difference (with the same access method): around 1-3%. Which could indicate that the network might not be the limiting factor when documents aren’t too big, since the documents size increased by 30 times while the import speed is “only” 1-3% slower.

- A third interesting point is that with bigger files, the import is noticeably slower. That’s especially true when using the Load Balancing methods, as that means that irrespective of which Apache HTTPD we are talking to, there will be 50% of the requests going to the local Alfresco Node while the remaining 50% will be redirected to the second, remote, Alfresco Node. Therefore, the bigger the document, the slower it will be compared to other methods, as for 50% of the requests, it will need to transfer the document through the network twice (client -> Apache HTTPD + Apache HTTPD -> remote Alfresco Node). With the large documents, the size increased by 10 times (vs average) and the import speed is 10-25% slower.

- In relation to the previous point, there is another interesting thing to note for the small/medium documents. Indeed, even with a single thread execution, using the Load Balancing method is actually 3-4% faster than the direct access to Tomcat and than a plain Reverse Proxy. How could that be? If we consider the network, it should be slower, no? I believe this shows that the Apache HTTPD implementation of the Load Balancing via “mod_jk” is really the most efficient way to access an Apache Tomcat. This difference would probably be even more exacerbated with multi-threads, while doing Load Tests / Stress Tests.

With the previous script, it was possible to test different import/access methods, but it was only using a single thread. This means that new requests would only come in when the previous one was already completed, and the result was returned to the client. That’s obviously not like that in reality, as you might have several users working at the same time, on different things. In terms of Migration, to increase the import speed, you will also most probably have a multi-threaded architecture as it can drastically reduce the time required. In a similar approach, the customer also wanted to see how the system behaves when we add several importers running in parallel.

Therefore, I used a second script, a wrapper of sort, that would trigger/manage/monitor multiple threads executing the first script. The plan is, of course, to provide the exact same command line arguments as before, but we would also need a new one for the number of threads to start. For example:

## script_name --access_method --doc_size nb_doc_to_import nb_threads

./alf_multi_thread.sh --apache-https-lb --small 1000 2

./alf_multi_thread.sh --apache-http-lb --average 1000 6

Most parameters would just be forwarded to the first script, except for the number of threads (obviously) and the number of documents to import. To keep things consistent, the parameter “nb_doc_to_import” should still represent the total number of documents to import and not the number per thread. This is because if you try to import 1000 documents on 6 threads, for example, you will be able to do either 996 (6166) documents or 1002 (6167) but not 1000… Giving 1000 documents to import, the script would do some division with remainder so that the threads #1, #2, #3 and #4 would import 167 documents while the threads #5 and #6 would only import 166 documents. This distribution would be calculated first and then all threads would be started at the same time (+/- 1ms). The script would then monitor the progress of the different threads and report the execution time when everything is completed.

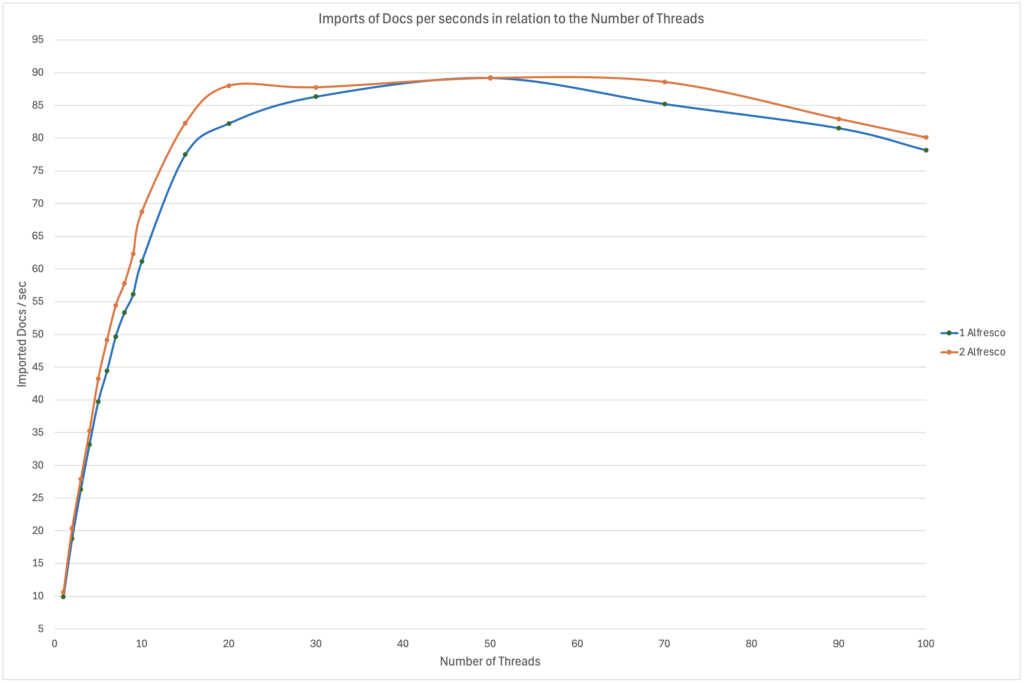

VI. Results – Scaling the import – Multi-threadsAs previously, I did 3 imports of 1000 documents each of took the average time. I executed the imports for 1 to 10 threads as well as 15, 20, 30, 50, 70, 90 and 100 threads. In addition, I did all that with both 1 Alfresco Node or 2 Alfresco Nodes, to be able to compare the speed if only 1 Tomcat is serving 100% of the requests or if the load is shared 50/50. The resulting graph looked like that:

So, what can we see on these results?

- It’s pretty clear that the ingestion speed is increasing in an almost linear way from 1 to ~8/10 threads. The increase then slows down between 10 and 50 threads before the import speed actually starts decreasing from 70 parallel threads. The limit reached and the number of threads seen might just be related to the fact that I was using a bash/shell script and the fact that the OS on which I was running the importer (my client workstation) was obviously limited in terms of processing power. I had only 4 CPUs, so when you try to run 20/50/70 threads on it, it’s bound to reach a threshold where your threads are actually just waiting for some CPU time before it gets executed. Therefore, adding more might not improve the performance and it might actually Have the opposite effect.

- There isn’t much difference in terms of ingestion speed whether we used only 1 or 2 Alfresco Node(s). With 1 to 4 threads, it was ~6% faster to use 2 Alfresco Nodes. From 5 to 10 threads, the gap widens a bit but, in the end, the maximum difference was only ~10%. After 10 parallel threads, the gap reduces again and then the threshold/limit is pretty much the same. You might think something like: “Why is it not 2x faster to use 2 Alfresco Nodes?”. Well, it’s just not enough threads. Whether you are running 6 threads on 1 Alfresco Node or 3 threads on each of 2 Alfresco Nodes (3×2=6), it’s just not enough to see a big difference. The number of threads is fixed, so you need to compare 6 threads in total. With that in mind, this test isn’t sufficient because we are far from what Tomcat can handle and that means the small difference seen is most probably coming from the client I was using and not Alfresco.

In summary, what is the limiting factor here? The CPU of my client workstation? The networking? The Clustering? The DB? It’s pretty hard to say without further testing. For example, adding more CPU and/or other client workstations to spread the source of the requests. Or removing the clustering on this environment so that Alfresco doesn’t need to maintain the clustering-related caches and other behaviours required. In the end, the customer just wanted to get an idea of how the import speed increases with additional threads, so the limit wasn’t really relevant here.

As a closing comment, it actually took much more time to run the tests and gather/analyse the results than to create the scripts used. As mentioned previously, if you would like to do real Load Tests / Stress Tests (or something quick&dirty as here :D), don’t hesitate to contact us, some of my colleagues would be very happy to help.

L’article Alfresco – Impact of SSL and LB on the import perf. est apparu en premier sur dbi Blog.

Automatic Transaction Rollback in Oracle Database 23ai

In the Oracle Database 23ai Enterprise Edition, it is now possible to automatically rollback some transactions, and a set of new parameters have been added to control this behavior.

Of course implementing this feature requires deep understanding of your application, data model and source code.

The general idea :* We don’t want critical transactions to be delayed for too long due to row locks caused by less important transactions.

* The new parameters would allow us to define if a transaction T1 is considered more important than a transaction T2.

* The new parameters would also let us set waiting thresholds, specifying how many seconds T1 will wait for the less important T2 to release the lock before forcing a rollback.

The parameters that are controlling this feature are :

txt_prioritytxn_auto_rollback_modetxn_auto_rollback_high_priority_wait_target txn_auto_rollback_medium_priority_wait_target Let’s see what each parameter means :

1-TXN_PRIORITY

This is where we define each transaction importance, it is used at session level.

We use the alter session command; and HIGH is the default value.

SQL> alter session set txn_priority = HIGH;SQL> alter session set txn_priority = MEDIUM;SQL> alter session set txn_priority = LOW;2 – TXN_AUTO_ROLLBACK_HIGH_PRIORITY_WAIT_TARGET and TXN_AUTO_ROLLBACK_MEDIUM_PRIORITY_WAIT_TARGET :

These are the waiting thresholds in seconds, defined at SYSTEM level.

They controls how many seconds would a high or medium transaction wait for a lesser important transaction over the same row locks.

These targets have the following characteristics :

* The minimum possible value is 1. (1 second)

* It can be set at PDB level, so pluggable databases can have different thresholds.

* In a RAC database, instances can also be set at different values.

For instance, to set a target of 30 seconds for high priority transactions :

SQL> alter system set txn_auto_rollback_high_priority_wait_target = 30;It is very important to note that this does NOT mean a high priority transaction will always wait for “only” 30 seconds.

For instance :

If you have a high transaction T1 waiting for a medium transaction T2, which is waiting for a low transaction T3, all for the same row locks, then the 30 seconds count will only start when T2 receive the lock, so you have to add also the time when T2 had been itself waiting for T3.

3- TXN_AUTO_ROLLBACK_MODE :

This is the feature’s mode, and it is defined at system level.

It may have as a value : “ROLLBACK” (the default) or “TRACK”.

If you set the mode at ‘track’, then the feature will just track and report the cases, no automatic rollback will happen.

So this mode (TRACK) could be a great way to test the feature’s effect before properly implementing it.

This parameter has the following characteristics :

* it is pdb mofifiable, so each pluggable database can have a different value.

* However RAC instances MUST have the same value.

We will do a simple test : We will make a low priority session (Session 1) update a row without commiting, and then try to update the same row in a high priority session (Session 2).

We will also use this case to illustrate that the thresholds are PDB defined, and to check some ways to see statistics about rollback operations.

Let’s connect to our root container and set the priority waiting parameters :

[oracle@localhost ~]$ sqlplus / as sysdba SQL> alter system set "txn_auto_rollback_high_priority_wait_target"=300; System altered. SQL> alter system set "txn_auto_rollback_medium_priority_wait_target"=300; System altered. SQL> select name,value from v$parameter where name like '%txn_priority%'; NAME VALUE ------------------------------------------------------------ ------------------------------------------------------------ txn_priority HIGH txn_auto_rollback_high_priority_wait_target 300 txn_auto_rollback_medium_priority_wait_target 300

The session priority is at HIGH because it is the default, and we have not changed it.

Now let’s connect to our pluggable database and verify our values :

SQL> alter session set container=DEVPDB; Session altered. SQL> select name,value from v$parameter where name like '%txn_priority%'; NAME VALUE ------------------------------------------------------------ ------------------------------------------------------------ txn_priority HIGH txn_auto_rollback_high_priority_wait_target 2147483647 txn_auto_rollback_medium_priority_wait_target 2147483647

The waiting values are different, because it is a pluggable level defined value, 2147483647 seconds is the default. let’s set it at 4 minutes :

SQL> alter system set "txn_auto_rollback_high_priority_wait_target"=160; System altered. SQL> alter system set "txn_auto_rollback_medium_priority_wait_target"=160; System altered. SQL> select name,value from v$parameter where name like '%txn_priority%'; NAME VALUE ------------------------------------------------------------ ------------------------------------------------------------ txn_priority HIGH txn_auto_rollback_high_priority_wait_target 160 txn_auto_rollback_medium_priority_wait_target 160

Now let’s set our current session at LOW priority and then make an update query without doing a commit or rollback :

-- Session 1 : Low priority SQL> alter session set "txn_priority"="LOW"; SQL> select name,value from v$parameter where name like '%txn_priority%'; NAME VALUE ------------------------------------------------------------ ------------------------------------------------------------ txn_priority LOW txn_auto_rollback_high_priority_wait_target 160 txn_auto_rollback_medium_priority_wait_target 160 SQL> update hr.EMPLOYEES set SALARY=10000 where EMPLOYEE_ID=102; 1 row updated. SQL>

Now let’s connect to a new session on the same pluggable database, and try to update the same row :

-- Session 2 : High Priority SQL> select name,value from v$parameter where name like '%txn_priority%'; NAME VALUE ------------------------------------------------------------ ------------------------------------------------------------ txn_priority HIGH txn_auto_rollback_high_priority_wait_target 160 txn_auto_rollback_medium_priority_wait_target 160 SQL> update hr.EMPLOYEES set SALARY=9000 where EMPLOYEE_ID=102;

=> The prompt will not return as the session is waiting for session 1

Back in session 1, you can see the blocking information, please note the specific EVENT (row high priority)

SQL> select sid,event,seconds_in_wait,blocking_session from v$session where event like '%enq%';

SID EVENT

---------- ----------------------------------------------------------------

SECONDS_IN_WAIT BLOCKING_SESSION

--------------- ----------------

42 enq: TX - row (HIGH priority)

132 197

After 4 minutes the prompt in session 2 will return, the query in session 1 had been rollbacked.

if you try to do a commit in session 1, you will receive an ORA-03135 error (Connection lost contact)

-- session 1 SQL> commit; commit * ERROR at line 1: ORA-03135: connection lost contact Process ID: 4905 Session ID: 197 Serial number: 46817 Help : https://docs.oracle.com/error-help/db/ora-03135/ SQL>

Back in session 2, you can do your commit and check the value of the row :

-- Session 2

SQL> commit;

Commit complete.

SQL> select SALARY from hr.EMPLOYEES where EMPLOYEE_ID=102;

SALARY

----------

9000

For statistics about how many rollbacks did you have overall, you can query the v$sysstat view

SQL> select * from v$sysstat where name like '%txns rollback%';

STATISTIC# NAME CLASS VALUE STAT_ID CON_ID

---------- ---------------------------------------------------------------- ---------- ---------- ---------- ----------

1894 txns rollback priority_txns_high_wait_target 384 5 312271427 3

1895 txns rollback priority_txns_medium_wait_target 384 2 3671782541 3

SQL>

In this case, in our pluggable database (con_id=3) :

– 2 times did a medium priority transaction caused the rollback of a low priority transaction.

– 5 times did a high priority transaction caused the rollback of either a medium or low priority transaction.

If you have set you feature’s mode as “TRACK” instead of “ROLLBACK”, then you should search statistics that are like ‘%txns track%’ instead :

SQL> select * from v$sysstat where name like '%txns track%';

STATISTIC# NAME CLASS VALUE STAT_ID CON_ID

---------- ---------------------------------------------------------------- ---------- ---------- ---------- ----------

1896 txns track mode priority_txns_high_wait_target 384 0 2659394049 3

1897 txns track mode priority_txns_medium_wait_target 384 0 1855585812 3

In this case, no occurrences because I did not used it. As mentioned before, this mode (TRACK) could be a great way to test “What would happen” if you implement the feature without causing any rollbacks.

L’article Automatic Transaction Rollback in Oracle Database 23ai est apparu en premier sur dbi Blog.

Hot Clone of a Remote PDB in a Dataguard Environment 19c

In Oracle Database 19c, if you have a Dataguard Configuration of a multitenant database, and you want to clone a pluggable database from a remote server to your primary, then you have to mind that the dataguard mechanism is about applying redo, not copying datafiles : After you execute the clone, the new cloned pluggable will be useless on the standby site.

you can verify that the clone’s standby contains no datafiles by quering the v$datafile view.

You would get something like this :

SQL> select name from v$datafile where con_id=4; NAME -------------------------------------------------------------- /opt/oracle/product/19c/dbhome_1/dbs/UNNAMED00044 /opt/oracle/product/19c/dbhome_1/dbs/UNNAMED00045 /opt/oracle/product/19c/dbhome_1/dbs/UNNAMED00046

The tricky part is that you might not be aware of this, because the dataguard broker will tell you the configuration is fine, and the validate command will confirm that the standby is ready for switchover. (The swithover will indeed work, but you won’t be able to open the new cloned pluggable in the standby site).

One possible solution, if you don’t want to rebuild your standby database after the clone, is to use a transient pluggable copy.

The idea is to :

– First do a clone to a temporary pluggable (or transient) in your primary, using a database link to your remote database.

– Define a “self-refering” database link in your primary database.

– Use this database link to create your pluggable database, which will be usable also on the standby.

It’s not complicated but it requires many steps, so let’s see how it’s done :

The procedureLet’s consider this example :

– We have a multitenant database called QUALCDB :

SQL> select name from v$database;

NAME

---------

QUALCDB

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 QUAL_PDB1 READ WRITE NO

SQL>

This database is protected by a dataguard configuration :

Configuration - qualcdb

Protection Mode: MaxPerformance

Members:

QUALCDB_SITE1 - Primary database

QUALCDB_SITE2 - Physical standby database

Fast-Start Failover: Disabled

Configuration Status:

SUCCESS (status updated 14 seconds ago)

– And a multitenant database called PRODCDB on a remote server, this would be the source of our clone :

SQL> select name from v$database;

NAME

---------

PRODCDB

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

4 PROD_PDB1 READ WRITE NO

5 PROD_PDB2 READ WRITE NO

SQL>

Our objective is to clone PROD_PDB2 into the primary QUALCDB database as QUAL_PDB2, bellow we will execute the following procedure :

– Clone the pluggable PROD_PDB2 to TEMP_QUAL2 on the primary QUALCDB, so this is the clone that is fine on the primary, but useless on the standby

– Create a Database link from the QUALCDB Primary to itself.

– Give this database link as a parameter to the standby database.

– Use this database link to clone TEMP_QUAL2 to our final pluggable QUAL_PDB2, this would our final clone that is fine on the standby.

Let’s do it step by step :

1 – In the source PRODCDB, we create a user that would execute the clone :

-- On the Remote prodcdb database SQL> grant create session, sysoper to C##PDBCLONE identified by Welcome__001 container=all; Grant succeeded. SQL>

2- In the destination QUALCDB, we create a database link to the PRODCDB database :

-- On the Primary SQL> CREATE DATABASE LINK "DBLINK_TO_REMOTEDB" CONNECT TO "C##PDBCLONE" IDENTIFIED BY "Welcome__001" USING 'your_tns_string_to_PRODCDB'; Database link created.

3- We open the root standby in read only :

Please note that in 19c, the Active Data Guard option license is not required when only the CDB$ROOT and PDB$SEED are opened in read only, as long as you ensure that the other users PDBs are always closed when apply is on.

You can find valuable details and ways to test that in this blog : dbi-services blog

-- On the standby

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED MOUNTED

3 QUAL_PDB1 MOUNTED

SQL> alter database open read only;

Database altered.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 QUAL_PDB1 MOUNTED

SQL>

4- We clone prod_pdb2 to a transient database, using the STANDBYS=NONE clause, let’s name the clone TEMP_QUAL2

SQL> select name from v$database;

NAME

---------

QUALCDB

SQL> show con_name

CON_NAME

------------------------------

CDB$ROOT

SQL>

SQL> create pluggable database TEMP_QUAL2 from PROD_PDB2@DBLINK_TO_REMOTEDB STANDBYS=NONE;

Pluggable database created.

SQL> alter pluggable database TEMP_QUAL2 open;

Pluggable database altered.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 QUAL_PDB1 READ WRITE NO

4 TEMP_QUAL2 READ WRITE NO

5- We want to create our QUAL_PDB2 from TEMP_QUAL2, but The temp_qual2 datafiles do not exist on the standby server, so we need something beyond the dataguard redo apply to make it work, this is provided by the standby_pdb_source_file_dblink parameter.

First, we define a “self-db-link” from the primary container to “itself” :

-- On the Primary SQL> show con_name CON_NAME ------------------------------ CDB$ROOT SQL> grant create session, sysoper to C##PDBCLONE identified by Welcome__001 container=all; Grant succeeded. SQL> CREATE DATABASE LINK "SELF_DBLINK" CONNECT TO "C##PDBCLONE" IDENTIFIED BY Welcome__001 USING 'your_primary_database_tns'; Database link created. SQL>

Then we set the standby_pdb_source_file_dblink parameter to this dblink on the standby :

--On the standby SQL> alter system set standby_pdb_source_file_dblink='SELF_DBLINK' scope=both; System altered. SQL>

Now we can create our pluggable using the STANDBYS=ALL clause, please note it is important in this case to restart temp_qual2 in read only mode even if you are not updating it

--On the Primary

SQL> alter pluggable database TEMP_QUAL2 close;

Pluggable database altered.

SQL> alter pluggable database TEMP_QUAL2 open read only;

Pluggable database altered.

SQL> create pluggable database QUAL_PDB2 from TEMP_QUAL2 STANDBYS=ALL;

Pluggable database created.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 QUAL_PDB1 READ WRITE NO

4 TEMP_QUAL2 READ WRITE NO

5 QUAL_PDB2 MOUNTED

SQL> alter pluggable database QUAL_PDB2 open;

Pluggable database altered.

And we are done ! the pluggable standby is OK and contains the files :

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED MOUNTED

3 QUAL_PDB1 MOUNTED

4 TEMP_QUAL2 MOUNTED

5 QUAL_PDB2 MOUNTED

SQL> select name from v$datafile where con_id=5;

NAME

--------------------------------------------------------------------------------

/u02/oradata/QUALCDB/QUALCDB_SITE2/0436816C267F1805E06571B1CAD248EB/datafile/o1_

mf_system_mbd8pzd9_.dbf

/u02/oradata/QUALCDB/QUALCDB_SITE2/1E4D5E04CFDD140AE06573A0AEC465A5/datafile/o1_

mf_sysaux_mbd8pzd9_.dbf

/u02/oradata/QUALCDB/QUALCDB_SITE2/1E4D5E04CFDD140AE06573A0AEC465A5/datafile/o1_

mf_undotbs1_mbd8pzdb_.dbf

At the end, you might want to delete the temporary TEMP_QUAL2 database, and to restart your standby in mount mode if you don’t have the Active Dataguard Licence :

--On the Primary SQL> alter pluggable database TEMP_QUAL2 close; Pluggable database altered. SQL> drop pluggable database TEMP_QUAL2 including datafiles; Pluggable database dropped. SQL> -- On the standby SQL> shutdown immediate Database closed. Database dismounted. ORACLE instance shut down. SQL> startup mount ORACLE instance started. Total System Global Area 1157627856 bytes Fixed Size 9134032 bytes Variable Size 318767104 bytes Database Buffers 822083584 bytes Redo Buffers 7643136 bytes Database mounted. SQL>Final Considerations

– You might prefer to clone your pluggable, then re-create the standby database. This is indeed more straightforward. However, you might use the procedure of this blog if you have Disaster Recovery obligations that force you to keep your standby up and ready all the time, or if your database is too big.

– Starting from 21c, A new feature “PDB Recovery Isolation” solves the problem, a “normal hot clone” is enough. BUT, this feature is only available within the Active Dataguard Licence. Please check this for more details :

Oracle Database Documentation

L’article Hot Clone of a Remote PDB in a Dataguard Environment 19c est apparu en premier sur dbi Blog.

And what about cloning a PDB in a Data Guard environment on ExaCC?

I have recently blogged about how to create a new PDB on ExaCC in a Data Guard environment, see https://www.dbi-services.com/blog/create-new-pdb-in-a-data-guard-environment-on-exacc/.

And what about if I clone a PDB on the primary CDB? Let’s see.

Read more: And what about cloning a PDB in a Data Guard environment on ExaCC? Clone PDB on the primary databaseLet’s clone our previous NEWPDB into NEWPDB_2 with dbaascli.

oracle@ExaCC-chz1-cl01n1:~/ [MYCDB1 (CDB$ROOT)] dbaascli pdb localclone --pdbName NEWPDB --dbName MYCDB --targetPDBName NEWPDB_2 DBAAS CLI version 24.1.2.0.0 Executing command pdb localclone --pdbName NEWPDB --dbName MYCDB --targetPDBName NEWPDB_2 Job id: 584e3b45-7725-4132-a865-88b20a559e4e Session log: /var/opt/oracle/log/MYCDB/pdb/localClone/dbaastools_2024-05-31_10-56-38-AM_394962.log Loading PILOT... Session ID of the current execution is: 7855 Log file location: /var/opt/oracle/log/MYCDB/pdb/localClone/pilot_2024-05-31_10-56-49-AM_399103 ----------------- Running Plugin_initialization job Enter TDE_PASSWORD: ********************* Completed Plugin_initialization job ----------------- Running Validate_input_params job Completed Validate_input_params job ----------------- Running Validate_target_pdb_service_name job Completed Validate_target_pdb_service_name job ----------------- Running Perform_dbca_prechecks job Completed Perform_dbca_prechecks job Acquiring read lock: _u02_app_oracle_product_19.0.0.0_dbhome_2 Acquiring write lock: mycdb Acquiring write lock: newpdb_2 ----------------- Running PDB_creation job Completed PDB_creation job ----------------- Running Load_pdb_details job Completed Load_pdb_details job ----------------- Running Configure_pdb_service job Completed Configure_pdb_service job ----------------- Running Configure_tnsnames_ora job Completed Configure_tnsnames_ora job ----------------- Running Set_pdb_admin_user_profile job Completed Set_pdb_admin_user_profile job ----------------- Running Lock_pdb_admin_user job Completed Lock_pdb_admin_user job ----------------- Running Register_ocids job Skipping. Job is detected as not applicable. ----------------- Running Prepare_blob_for_standby_in_primary job Completed Prepare_blob_for_standby_in_primary job Releasing lock: newpdb_2 Releasing lock: mycdb Releasing lock: _u02_app_oracle_product_19.0.0.0_dbhome_2 ----------------- Running Generate_dbsystem_details job Acquiring native write lock: global_dbsystem_details_generation Releasing native lock: global_dbsystem_details_generation Completed Generate_dbsystem_details job ---------- PLUGIN NOTES ---------- Note: Pluggable database operation is performed on the primary database. In order to successfully complete the operation, the file /var/opt/oracle/log/reg_tmp_files/MYCDB_2024-05-31_10-56-49-AM_399103.tar needs to be copied to the standby database node and additional steps need to be run on all standby databases. Refer to the Cloud Service documentation for the complete steps. ---------- END OF PLUGIN NOTES ---------- dbaascli execution completedChecks

Let’s check new clone on the primary side:

oracle@ExaCC-chz1-cl01n1:~/ [MYCDB1 (CDB$ROOT)] sqh

SQL*Plus: Release 19.0.0.0.0 - Production on Fri May 31 11:00:12 2024

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

4 PDB2 READ WRITE NO

5 PDB3 READ WRITE NO

6 NEWPDB READ WRITE NO

7 NEWPDB_2 READ WRITE NO

SQL>

And on the standby side:

oracle@ExaCC-chz2-cl01n1:~/ [MYCDB1 (CDB$ROOT)] sqh

SQL*Plus: Release 19.0.0.0.0 - Production on Fri May 31 11:00:41 2024

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c EE Extreme Perf Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ ONLY NO

4 PDB2 READ ONLY NO

5 PDB3 READ ONLY NO

6 NEWPDB READ ONLY NO

7 NEWPDB_2 MOUNTED

SQL> select name, total_size/1024/1024/1024 GB from v$pdbs;

NAME GB

-------------------- ----------

PDB$SEED 1.85546875

PDB1 2308.65039

PDB2 1534.89966

PDB3 1095.55273

NEWPDB 4.34375

NEWPDB_2 0

6 rows selected.

As expected the PDB clone on the standby side has been created with the Data Guard redo, but is empty.

Resolve the problemLet’s transfer the BLOB file the initial commands provided us. The file is here:

[opc@ExaCC-chz2-cl01n1 ~]$ sudo mv /tmp/MYCDB_2024-05-31_10-56-49-AM_399103.tar /var/opt/oracle/log/reg_tmp_files/ [opc@ExaCC-chz2-cl01n1 ~]$ sudo chown oracle: /var/opt/oracle/log/reg_tmp_files/MYCDB_2024-05-31_10-56-49-AM_399103.tar

With the blob file as reference, clone the PDB on the standby side. It is the exact same command used on the primary side, with just an additional command --standbyBlobFromPrimary.